Transformer Models in NLP

Transformer models like GPT-2 and BERT have significantly impacted natural language processing. These models focus on different aspects of text processing, with GPT-2 excelling in creative text generation and BERT specializing in understanding the intricacies of text.

GPT-2, available through Hugging Face's Transformers library, is designed to predict the next word in a sequence, making it adept at generating coherent text. It also shows potential for text classification by effectively categorizing snippets based on sequence endings.
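To make the idea of next-word prediction concrete, here is a minimal sketch that uses a toy bigram count model in place of a neural network. It is not GPT-2 and does not use the Transformers library; the corpus and the function names `train_bigram` and `generate` are made up for illustration. What it shares with GPT-2 is the autoregressive loop: predict one word, append it, and predict again from the extended sequence.

```python
from collections import Counter, defaultdict

def train_bigram(corpus):
    """Count, for every word, which words follow it in the corpus."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, nxt in zip(words, words[1:]):
            follows[current][nxt] += 1
    return follows

def generate(follows, start, max_words=5):
    """Greedy autoregressive generation: keep appending the most frequent next word."""
    output = [start]
    for _ in range(max_words):
        candidates = follows.get(output[-1])
        if not candidates:
            break  # no known continuation for the last word
        output.append(candidates.most_common(1)[0][0])
    return " ".join(output)

# Hypothetical toy corpus, chosen only to keep the example readable.
corpus = [
    "models predict the next word",
    "models predict the next word in a sequence",
    "models generate text",
]
follows = train_bigram(corpus)
print(generate(follows, "models", max_words=4))  # models predict the next word
```

A real language model replaces the count table with a learned probability distribution over a large vocabulary, and usually samples from it rather than always taking the top candidate.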

BERT, on the other hand, is built to grasp context by reading sentences bidirectionally. This makes it proficient at:

- Sorting text into categories

- Recognizing sentiment

- Evaluating full context before drawing conclusions
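The value of reading in both directions can be shown with a small fill-in-the-blank sketch. This is a toy built on word-pair counts, not BERT's actual mechanism (BERT is trained with masked language modeling over transformer attention); the corpus and the names `count_pairs` and `fill_mask` are assumptions for illustration. The point is only that the best guess for a hidden word can change once the words to its right are taken into account.

```python
from collections import Counter

def count_pairs(corpus):
    """Count adjacent word pairs across all sentences."""
    pairs = Counter()
    for sentence in corpus:
        words = sentence.lower().split()
        pairs.update(zip(words, words[1:]))
    return pairs

def fill_mask(pairs, vocab, left, right=None):
    """Pick the word that best fits after `left` and, if given, before `right`."""
    def score(word):
        s = pairs[(left, word)]
        if right is not None:
            s *= pairs[(word, right)]  # right-hand context must also agree
        return s
    return max(sorted(vocab), key=score)

# Hypothetical toy corpus.
corpus = [
    "the movie was boring",
    "the movie ended late",
    "the meal tasted great",
]
pairs = count_pairs(corpus)
vocab = {word for sentence in corpus for word in sentence.split()}

# Sentence to complete: "the [MASK] tasted great"
print(fill_mask(pairs, vocab, left="the"))                  # movie
print(fill_mask(pairs, vocab, left="the", right="tasted"))  # meal
```

With only the left-hand word available, the most frequent continuation wins; adding the right-hand word rules it out and selects the candidate that fits the whole sentence.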

While GPT-2 generates text with a distinctive style, BERT excels at comprehension. Both have had a significant impact on NLP, with each model continuing to develop its specialty and drive advances in machine text understanding and communication.

Challenges in Deep Learning Training

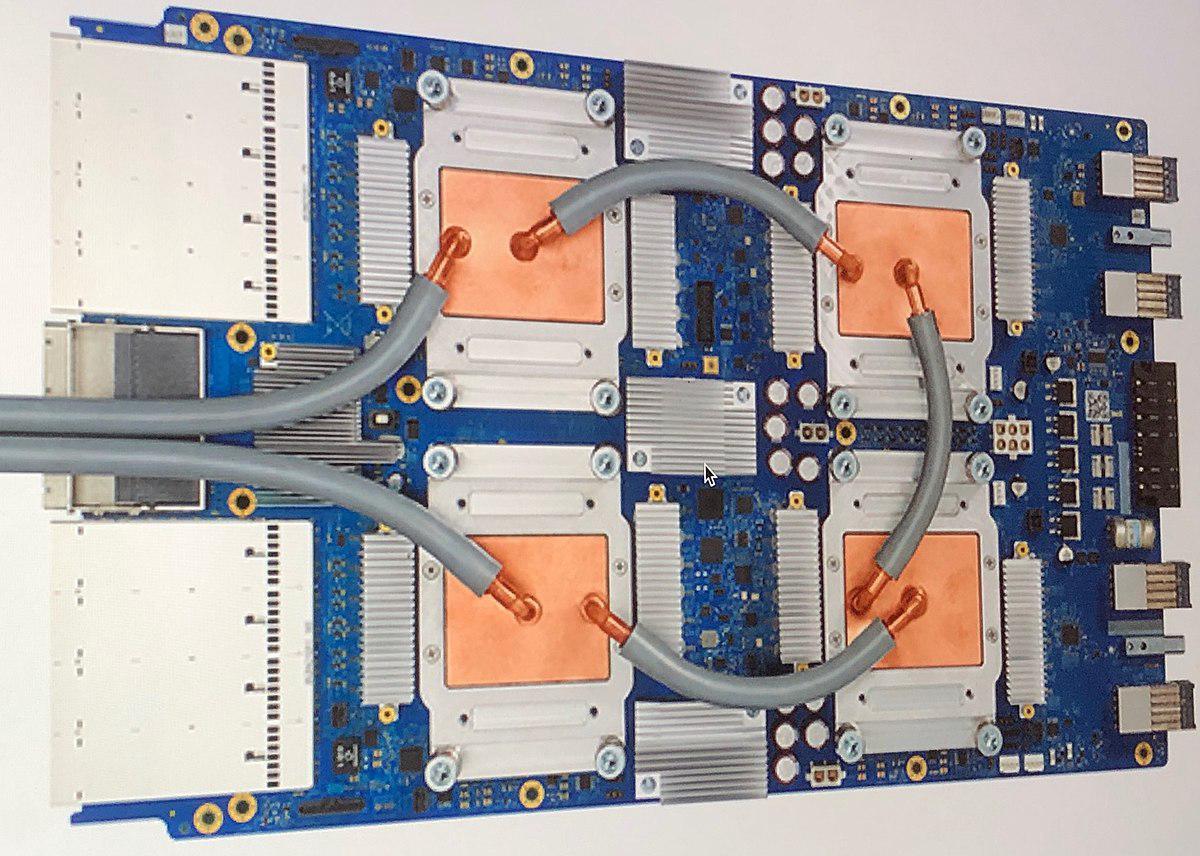

Deep learning presents significant computational challenges, mainly due to the vast amount of data involved in training models. These issues require careful management and substantial processing power.

GPUs are essential for deep learning training due to their parallel processing abilities. However, a current GPU shortage, exacerbated by demand from other industries, is causing difficulties in research circles.

The structure of deep learning models, often incredibly intricate, requires even more computational resources as layers increase and models become more sophisticated. This intricacy means training involves careful coordination of data and processes to ensure efficient and accurate learning.

Data processing itself presents challenges, particularly in ensuring efficient batch processing. While splitting the data into batches reduces processor load, it requires architectures to transition smoothly between batches to maintain performance.
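As a rough illustration of batch processing, the sketch below splits a dataset into fixed-size batches and carries the model state from one batch to the next. The one-parameter model, the data, and the names `iter_batches` and `fit_slope` are assumptions chosen to keep the example short; real training loops use a framework and far larger batches.

```python
def iter_batches(samples, batch_size):
    """Yield consecutive slices of `samples`; the last batch may be smaller."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(samples), batch_size):
        yield samples[start:start + batch_size]

def fit_slope(samples, batch_size=2, lr=0.05, epochs=30):
    """Fit y = w * x with mini-batch gradient descent on squared error."""
    w = 0.0  # model state carried over from one batch to the next
    for _ in range(epochs):
        for batch in iter_batches(samples, batch_size):
            # Average gradient of (w * x - y) ** 2 over the current batch only.
            grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= lr * grad
    return w

# Hypothetical data generated from y = 3 * x.
data = [(1.0, 3.0), (2.0, 6.0), (3.0, 9.0), (4.0, 12.0)]
print(list(iter_batches([1, 2, 3, 4, 5], 2)))  # [[1, 2], [3, 4], [5]]
print(round(fit_slope(data), 6))               # 3.0
```

Only one batch is held in the gradient computation at a time, which is what keeps memory and processor load bounded; the weight `w` is the only thing that has to persist across batch boundaries.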

Despite these obstacles, the AI community often develops creative solutions, such as distributed and cloud-based resources, offering hope for overcoming these computational limitations.

Decentralized Compute Solutions

Decentralized compute solutions are changing how computational resources are accessed, offering an innovative response to the growing demands of deep learning. Platforms like Akash, Bittensor, and Gensyn signal a shift toward more accessible and democratized computational resources.

Akash, built on the Cosmos blockchain, has transformed cloud computing by creating an open marketplace for underutilized computing resources. This platform allows AI developers and researchers to access essential resources without the long waits and high costs typical of traditional cloud providers.

Bittensor takes an innovative approach using "Proof of Intelligence," enabling developers to join a marketplace that values and rewards genuine computational contributions. This decentralized network moves away from traditional AI commodification methods, optimizing digital commodity creation.

Gensyn focuses on a trustless environment for machine learning tasks, introducing innovative methods for verifying tasks without redundant computations. By automating task distribution and payment through smart contracts, Gensyn reduces the need for central oversight.

Together, these platforms are creating an ecosystem where access to computational resources is no longer restricted, fostering a more inclusive future for AI development.

Verification in Decentralized ML

Verifying machine learning work on decentralized platforms is a challenge that requires attention. Gensyn leads in this area, offering innovative solutions for verifying complex ML tasks in a trustless environment.

Gensyn's approach includes:

- Probabilistic proof-of-learning

- Graph-based pinpoint protocol

- Truebit-style incentive game

Probabilistic proof-of-learning uses metadata from gradient-based optimization processes to create work certificates. This method is scalable and avoids the resource-intensive overhead usually associated with verifying ML outputs through traditional replication.
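One way to picture this is a spot check over recorded training checkpoints. The sketch below is an illustration under stated assumptions, not Gensyn's actual protocol: it assumes the training step is deterministic, that the worker publishes the weight after every step as its "certificate", and that the verifier re-executes only a random sample of steps. The names `sgd_step`, `train_with_trace`, and `spot_check` are hypothetical.

```python
import random

def sgd_step(w, batch, lr=0.05):
    """One deterministic gradient step for the model y = w * x."""
    grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
    return w - lr * grad

def train_with_trace(batches, w0=0.0):
    """Worker side: train and record the weight after every step."""
    trace = [w0]
    for batch in batches:
        trace.append(sgd_step(trace[-1], batch))
    return trace

def spot_check(trace, batches, samples, rng, tol=1e-12):
    """Verifier side: re-run only `samples` randomly chosen steps."""
    steps = rng.sample(range(len(batches)), samples)
    return all(
        abs(sgd_step(trace[i], batches[i]) - trace[i + 1]) <= tol
        for i in steps
    )

# Hypothetical workload: six small batches drawn from y = 3 * x.
batches = [[(1.0, 3.0), (2.0, 6.0)], [(3.0, 9.0), (4.0, 12.0)]] * 3
honest = train_with_trace(batches)

tampered = list(honest)
tampered[3] += 0.5  # a checkpoint that was not produced by real training

print(spot_check(honest, batches, samples=3, rng=random.Random(0)))
print(spot_check(tampered, batches, samples=len(batches), rng=random.Random(0)))
```

Checking every step is equivalent to full replication, so the saving comes from sampling: with fewer samples the verifier does less work but may miss a forged step, which is why the guarantee is probabilistic.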

The platform also employs a graph-based pinpoint protocol to ensure consistency in executing and comparing verification work. To encourage honesty and accuracy, Gensyn uses a Truebit-style incentive game with staking and slashing mechanics.
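The staking and slashing idea can be sketched in a few lines. This is a simplified illustration, not Gensyn's or Truebit's contract logic: the `Participant` class, the `resolve_challenge` function, and the 50% slash fraction are all assumptions. The party that turns out to be wrong in a dispute loses part of its stake to the party that was right.

```python
from dataclasses import dataclass

@dataclass
class Participant:
    name: str
    stake: float

def resolve_challenge(solver, challenger, solver_was_correct, slash_fraction=0.5):
    """Settle a dispute: slash the losing party and pay the winner."""
    if solver_was_correct:
        loser, winner = challenger, solver  # a false challenge is penalized
    else:
        loser, winner = solver, challenger  # incorrect work is penalized
    penalty = loser.stake * slash_fraction
    loser.stake -= penalty
    winner.stake += penalty
    return penalty

# Hypothetical stakes, in arbitrary token units.
solver = Participant("solver", stake=100.0)
challenger = Participant("challenger", stake=40.0)

penalty = resolve_challenge(solver, challenger, solver_was_correct=False)
print(penalty, solver.stake, challenger.stake)  # 50.0 50.0 90.0
```

Because both sides have funds at risk, submitting bad work and raising baseless challenges are both costly, which is the incentive the game relies on.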

By combining these varied verification techniques, Gensyn establishes itself as a leader in decentralized ML systems. These efforts not only preserve but actively advance the potential for innovation within decentralized machine learning, moving the field in new directions.

Future of AI and Decentralized Compute

The integration of AI with decentralized compute offers many opportunities and transformative potential. This convergence promises to change how society uses computational resources, making AI advancements more attainable for a wider audience.

Improved accessibility through decentralized compute platforms ensures that computational power isn't restricted to large tech companies. By pooling and utilizing otherwise idle compute power, these platforms create a foundation for a more inclusive AI ecosystem.

The cost-effectiveness of decentralized solutions is another compelling aspect. This affordability encourages experimentation and supports a wider range of applications and developments that can succeed with modest budgets.

As AI progresses, the potential for innovation grows significantly within these frameworks. This community-driven approach could lead to new algorithms or applications that leverage the diversity and innovative power of a worldwide network.

However, combining blockchain and AI technologies presents challenges, particularly in:

- Securely and efficiently distributing computational tasks

- Balancing decentralization's openness with necessary regulation

Despite these obstacles, the combination of AI and decentralized compute offers promising possibilities. As this ecosystem matures, it promises to reshape not only technology but also societal structures, fostering a more resilient, adaptable world.

This article was crafted by Writio.

