Basics of Neural Networks

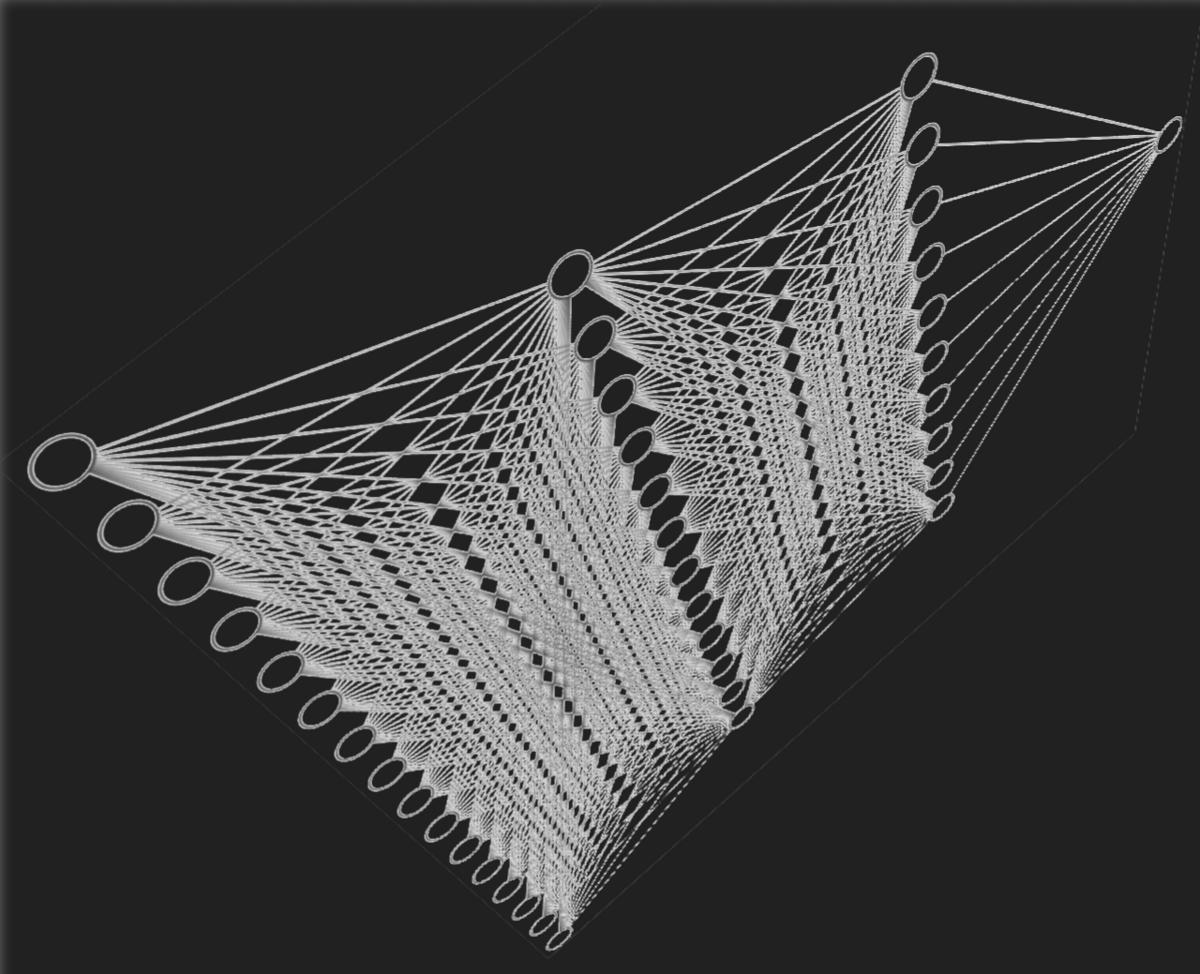

Artificial Neural Networks (ANNs) are structured in a way that mirrors the basic functioning of the human brain. Just as the brain uses neurons to process and transmit information, ANNs use artificial neurons, or nodes. These nodes are grouped into layers: an input layer, one or more hidden layers, and an output layer.

The input layer receives the initial data, which could be anything from image pixels to audio samples. This layer's main job is to pass these data points to the hidden layers, where the main computations occur. Each node in the hidden layers combines the inputs it receives through weighted connections. The node then applies an activation function, which decides how much of the received information passes through as output to the next layer, a critical step in refining data into something usable.
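The computation inside a single node can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function name `neuron_output`, the example weights, and the choice of `math.tanh` as the activation are all assumptions made for the example.

```python
import math

def neuron_output(inputs, weights, bias, activation=math.tanh):
    """Weighted sum of the inputs plus a bias, passed through an activation.

    The default activation (tanh) is only a placeholder for illustration.
    """
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return activation(z)

# Hypothetical inputs and weights: the weighted sum is 0.2 - 0.3 + 0.2 = 0.1
out = neuron_output([0.5, -1.0, 2.0], [0.4, 0.3, 0.1], bias=0.0)
print(out)
```

Larger weights let an input influence the node's output more strongly, which is why training focuses on adjusting them.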

The final piece of this neural puzzle is the output layer, which produces the result. After the refining process from the input layer through the hidden layers, the output layer translates the refined data into a final decision, be it identifying an object in a photo, deciding the next move in a game of chess, or diagnosing a medical condition from patient data.

These networks are trained using vast amounts of input data, iteratively adjusting the weights of connections via processes like backpropagation, in which the system learns from its errors to make better decisions next time.

Through such architectures and mechanisms, artificial neural networks hold the potential to transcend traditional computing's rigid bounds, enabling adaptable, nuanced interactions and decisions akin to human judgment but at electronic speeds.

Types of Neural Networks

Feed-forward neural networks are perhaps the simplest kind of ANN. They operate in a straight line from input to output with no looping back. This means they can efficiently model relationships where the current inputs dictate the outputs, without any need for memory of previous inputs. Common applications include voice recognition and computer vision tasks where the immediate input data matters most.
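To make the "straight line from input to output" concrete, here is a small sketch of a forward pass through two layers. The helper names (`dense`, `feed_forward`), the layer sizes, and the weights are hypothetical values chosen so the arithmetic is easy to follow by hand.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation):
    """One fully connected layer: each row of weights feeds one node."""
    return [
        activation(sum(x * w for x, w in zip(inputs, row)) + b)
        for row, b in zip(weights, biases)
    ]

def feed_forward(inputs, layers):
    """Pass the data through each layer in order, with no loops back."""
    activations = inputs
    for weights, biases, activation in layers:
        activations = dense(activations, weights, biases, activation)
    return activations

# Hypothetical network: 2 inputs -> 2 hidden nodes (ReLU) -> 1 linear output
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, 0.0], relu),
    ([[1.0, 2.0]], [0.5], lambda z: z),
]
result = feed_forward([2.0, 1.0], layers)
print(result)  # hidden layer gives [1.0, 1.5]; output is 1.0 + 3.0 + 0.5 = 4.5
```

Because nothing is carried over between calls, feeding the same input always yields the same output.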

Recurrent neural networks (RNNs) have loops in their architecture that let them carry 'state', a kind of 'memory' of previous inputs, from one input to the next. This makes RNNs well suited to tasks such as:

- Sequence prediction

- Natural language processing

- Speech recognition

They excel at tasks where context from earlier data influences current decisions.
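The effect of this carried state can be seen in a toy recurrent update. The sketch below assumes a single scalar hidden state and the common update rule h = tanh(w_x * x + w_h * h_prev + b); the function name `rnn_run` and the weight values are illustrative, not taken from any particular library.

```python
import math

def rnn_run(sequence, w_x=0.5, w_h=0.8, bias=0.0):
    """Run a one-unit recurrent cell over a sequence and return every state."""
    h = 0.0  # initial hidden state: no history yet
    states = []
    for x in sequence:
        # The new state depends on the current input AND the previous state.
        h = math.tanh(w_x * x + w_h * h + bias)
        states.append(h)
    return states

with_history = rnn_run([1.0, 0.0, 1.0])
without_history = rnn_run([0.0, 0.0, 1.0])

# Both sequences end with the same input (1.0), yet the final states differ
# because the first sequence carried information forward from its first step.
print(with_history[-1], without_history[-1])
```

A feed-forward network given only the last input could not tell these two sequences apart.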

In the realm of image processing and certain video applications, convolutional neural networks (CNNs) shine. Leveraging a hierarchy of layers to analyze visual imagery, CNNs use convolutional layers to extract features from local regions of an image, pooling layers to reduce the size of the resulting feature maps, and fully connected layers to make predictions or classifications. CNNs are commonly used in applications like facial recognition, medical image analysis, and any task requiring object recognition within scenes.
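The two operations that distinguish CNNs, convolution and pooling, can be sketched in plain Python. This is a simplified illustration: the function names, the tiny 5x5 "image", and the hand-picked edge-detecting kernel are assumptions, and the convolution is written as cross-correlation with no padding and stride 1, which is how most deep learning libraries define it.

```python
def conv2d(image, kernel):
    """Slide the kernel over the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]

def max_pool2d(feature_map, size=2):
    """Keep the largest value in each non-overlapping size x size window."""
    return [
        [
            max(
                feature_map[i + di][j + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(0, len(feature_map[0]) - size + 1, size)
        ]
        for i in range(0, len(feature_map) - size + 1, size)
    ]

# Hypothetical image: dark (0) on the left, bright (1) on the right
image = [[0, 0, 1, 1, 1] for _ in range(5)]
# Hand-picked kernel that responds to a dark-to-bright vertical edge
kernel = [[-1, 1], [-1, 1]]

features = conv2d(image, kernel)  # 4x4 map, strongest where the edge sits
pooled = max_pool2d(features)     # 2x2 map, edge response preserved
print(features)
print(pooled)
```

In a trained CNN the kernel values are learned rather than hand-picked, but the sliding-window and downsampling mechanics are the same.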

Each kind of network is suited to particular kinds of problems, based on its unique architecture and processing capabilities. Deciding which network to use will largely depend on the problem at hand, with each offering distinct methods for capturing relationships within data, showcasing the versatility and continually expanding applications of neural networks in artificial intelligence.

Learning Processes in ANNs

Artificial neural networks have learning procedures that enable them to improve over time. At the core of learning in ANNs lies the concept of training through exposure to vast datasets alongside the iterative adjustment of network weights and biases, a process governed fundamentally by algorithms like backpropagation and gradient descent.

In a typical ANN, the learning process starts with training data that includes the expected output (supervised learning), which the network uses to adjust its weights. Backpropagation is used to calculate the gradient (degree of change) of the network's loss function, a measure of how far the network's prediction deviates from the actual output, with respect to each weight. Once these deviations are known, the gradient of the loss is fed back through the network to adjust the weights in such a way that the error decreases in future predictions.
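As a rough sketch of what "feeding the gradient back" means, the example below applies the chain rule to a deliberately tiny network: one sigmoid hidden node followed by one linear output, with a squared-error loss. The network shape, the names `loss` and `backprop`, and the numeric values are assumptions for illustration; the analytic gradients are checked against finite differences.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def loss(w1, w2, x, target):
    """Forward pass only: h = sigmoid(w1*x), y = w2*h, L = 0.5*(y - target)^2."""
    h = sigmoid(w1 * x)
    y = w2 * h
    return 0.5 * (y - target) ** 2

def backprop(w1, w2, x, target):
    """Return (dL/dw1, dL/dw2) by applying the chain rule from output to input."""
    # Forward pass, keeping intermediate values for the backward pass
    h = sigmoid(w1 * x)
    y = w2 * h
    # Backward pass: start at the loss and move toward the input
    dL_dy = y - target
    dL_dw2 = dL_dy * h
    dL_dh = dL_dy * w2
    dL_dw1 = dL_dh * h * (1.0 - h) * x  # sigmoid'(z) = h * (1 - h)
    return dL_dw1, dL_dw2

# Hypothetical weights, input, and target
w1, w2, x, target = 0.5, -1.5, 2.0, 1.0
grad_w1, grad_w2 = backprop(w1, w2, x, target)

# Numerical check with central finite differences
eps = 1e-6
num_w1 = (loss(w1 + eps, w2, x, target) - loss(w1 - eps, w2, x, target)) / (2 * eps)
num_w2 = (loss(w1, w2 + eps, x, target) - loss(w1, w2 - eps, x, target)) / (2 * eps)
print(grad_w1, num_w1)
print(grad_w2, num_w2)
```

Real networks repeat the same backward step layer by layer, reusing each layer's intermediate values from the forward pass.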

Gradient descent, a first-order iterative optimization algorithm, plays a pivotal role in the weight-adjustment phase. During training, it determines the extent and direction in which the weights should be corrected so that the next result is closer to the expected output. These are incremental steps toward minimum error, which make ANN predictions more accurate over time.
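The incremental steps can be illustrated with a one-weight model. The sketch below fits y = w * x to a few made-up points by repeatedly moving w against the gradient of the mean squared error; the data, the learning rate of 0.05, and the step count are assumptions chosen so the example converges quickly.

```python
def mse_gradient(w, xs, ys):
    """Derivative of mean((w*x - y)^2) with respect to w."""
    n = len(xs)
    return sum(2.0 * (w * x - y) * x for x, y in zip(xs, ys)) / n

def gradient_descent(xs, ys, w=0.0, learning_rate=0.05, steps=50):
    for _ in range(steps):
        # Step in the direction that reduces the error, scaled by the learning rate
        w -= learning_rate * mse_gradient(w, xs, ys)
    return w

# Hypothetical training data generated by y = 2x
xs = [1.0, 2.0, 3.0]
ys = [2.0, 4.0, 6.0]

w_fit = gradient_descent(xs, ys)
print(w_fit)  # approaches 2.0
```

A learning rate that is too large can overshoot the minimum and diverge, while one that is too small makes training slow, so it is usually tuned per problem.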

Activation functions within the nodes determine the output that feeds into the next layer or serves as a final prediction. These functions apply a non-linear transformation to the inputs of the neuron. Well-known activation functions include:

- Sigmoid: squashes input values into a range between 0 and 1

- ReLU (Rectified Linear Unit): passes positive values through unchanged and outputs 0 for negative values
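Both functions are short enough to write out directly. The sketch below uses only the standard `math` module; the two-branch form of `sigmoid` is one common way to avoid overflow for large negative inputs, not the only one.

```python
import math

def sigmoid(z):
    """Map any real number into the range 0 to 1."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    # For negative z, this equivalent form avoids overflow in exp()
    e = math.exp(z)
    return e / (1.0 + e)

def relu(z):
    """Return z for positive inputs and 0 otherwise."""
    return max(0.0, z)

for z in (-2.0, 0.0, 3.0):
    print(z, sigmoid(z), relu(z))
```

Sigmoid is often used where an output should read as a probability, while ReLU is a common default for hidden layers because it is cheap to compute.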

The interplay between data exposure, backpropagation, gradient descent, and activation functions makes up a versatile framework that endows ANNs with their notable 'intelligence'. This continual learning ability mirrors an essential attribute of human learning, highlighted by an ever-growing sophistication set to conquer tasks once deemed insurmountable for machines.

Real-World Applications of Neural Networks

Neural networks have paved the way for transformative changes across a spectrum of industries, harnessing their potential to interpret complex data in ways that mimic human cognition.

In healthcare, artificial neural networks are instrumental in enhancing diagnostic accuracy and patient care. Image recognition technology powered by convolutional neural networks is used extensively in analyzing medical imaging like MRIs and CT scans. These systems can identify minute anomalies that might be overlooked by human eyes, aiding in early diagnosis of conditions such as cancer or neurological disorders [1]. Machine learning models are also being developed to predict patient outcomes, personalize treatment plans, and assist in drug discovery.

The financial industry also reaps significant benefits from the predictive capabilities of neural networks. These systems are employed to detect patterns and anomalies that indicate fraud, enhancing the security of transactions. Neural networks are used for high-frequency trading, where they analyze vast arrays of market data to make automated trading decisions within milliseconds. Predictive analytics in finance also helps institutions assess credit risk, personalize banking services, and optimize investment portfolios [2].

Autonomous driving is another field where neural networks are making a significant impact. Self-driving cars use neural networks, typically convolutional networks for camera images and sometimes recurrent networks for context over time, to process data from various sensors and cameras and navigate safely through traffic. This technology interprets the vehicle's environment and can make predictive decisions, adjust to new driving conditions, and learn from new scenarios encountered on the road.

Neural networks facilitate enhancements in user interfaces through speech-to-text systems. Products like virtual assistants are becoming increasingly refined in understanding and processing natural language thanks to advancements in neural network technologies. These systems improve user experience by offering more accurate responses and understanding user requests in a more human-like manner.

In all these applications, the underlying strength of neural networks is their ability to process and analyze data at a scale and speed that vastly exceeds human capabilities, yet with a growing approximation to human-like discernment.

Future Trends and Advancements in Neural Networks

As we look into the future of artificial intelligence, neural networks are poised for groundbreaking advancements that will further integrate them into daily life and expand their capabilities into new domains. These transformations are anticipated in the areas of hardware enhancements, algorithmic refinements, and an expanding spectrum of applications.

One compelling development lies in hardware innovations, specifically the development of specialized processors such as neuromorphic chips. These chips are designed to mimic the neural architectures of the human brain, processing information more efficiently than traditional hardware. This promises faster processing times, reduced energy consumption, and more sophisticated cognitive capabilities akin to natural neural processing.

Algorithmic improvements also promise to expand the capabilities of neural networks, giving them a better foundation for learning complex patterns with greater accuracy and less human intervention. Advances in unsupervised learning algorithms could dramatically enhance machines' ability to process and understand unlabelled data, opening up new possibilities for AI to generate insights without explicit programming. Improvements in transfer learning, which allows models developed for one task to be reused for another related task, are poised to reduce the resources required for training data-hungry models.

Beyond traditional domains, the scope of applications for neural networks is set to broaden dramatically. With growing integration in IoT devices and smart city projects, neural networks could soon manage everything from traffic systems and energy networks to public safety and environmental monitoring. In the workplace, neural networks might soon be found facilitating seamless human-machine collaboration, augmenting human roles rather than replacing them.

Developments in quantum neural networks, a fusion of quantum computing and neural networks, could reshape computational paradigms. These networks would leverage the principles of quantum mechanics to process information at speeds and volumes beyond the reach of current AI technologies. This could profoundly accelerate the solving of complex optimization problems in fields like logistics, finance, and materials science [3].

Crucial to all these developments will be the ethical implementation of such technology. As neural networks become more integral to critical applications, ensuring they are developed and deployed in ways that preserve privacy, security, and fairness will become ever more vital.

The future of neural networks promises technological development and a deeper integration into the fabric of society. With each breakthrough in hardware and improvement in algorithms, paired with expanding application areas, neural networks are set to transition from novel computational tools to foundational elements driving the next phase of intelligent automation and human-machine collaboration.

This article was written by Writio.

1. Shen D, Wu G, Suk HI. Deep learning in medical image analysis. Annu Rev Biomed Eng. 2017;19:221-248.

2. Ozbayoglu AM, Gudelek MU, Sezer OB. Deep learning for financial applications: A survey. Applied Soft Computing. 2020;93:106384.

3. Schuld M, Sinayskiy I, Petruccione F. An introduction to quantum machine learning. Contemporary Physics. 2015;56(2):172-185.
