Introduction

In this task we are going to learn how to classify YouTube comments as Ham or Spam. This comprehensive step-by-step guide will take you from complete beginner to mastering the practical implementation of the Naive Bayes classifier. If you have not yet read our previous article, Naive Bayes Classifier: A Complete Guide to Probabilistic Machine Learning, please do so.

What is Multinomial Naive Bayes Classifier

The Multinomial Naive Bayes classifier is one of the text-based Naive Bayes classifier algorithms and is suitable for text classification with a balanced dataset. In this article we are going to practically implement the Multinomial Naive Bayes classifier to classify whether a given YouTube comment is Ham or Spam.

Dataset Overview

The database for this example is taken from https://archive.ics.uci.edu/ml/machine-learning-databases/00380/

We usually modify the databases slightly so that they fit the purpose of the course. Therefore, we suggest you use the database provided in the resources in order to get the same results as the ones in the lectures.

The dataset folder contains 5 CSV files, which we are going to use for training and testing.

The files are named:

Youtube01.csv

Youtube02.csv

Youtube03.csv

Youtube04.csv

Youtube05.csv

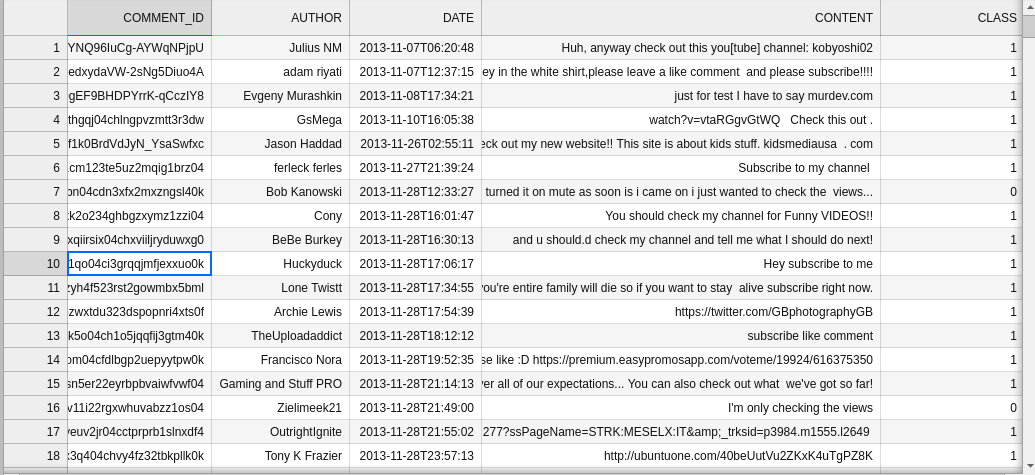

Here is a sneak peek of the dataset:

Import the necessary Libraries

To get started with building our classifier, we first need to import all the necessary Python libraries that we are going to use, as follows:

Python
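The original import cell is not shown here, so the following is only a sketch of the imports the later steps appear to need. It assumes pandas, NumPy and scikit-learn are installed; the exact list used by the author may differ.

```python
import glob  # find the CSV files on disk

import numpy as np  # used for the class_prior array
import pandas as pd  # DataFrame handling
from sklearn.feature_extraction.text import CountVectorizer  # tokenizer / word counts
from sklearn.metrics import ConfusionMatrixDisplay, classification_report  # evaluation
from sklearn.model_selection import train_test_split  # train/test splitting
from sklearn.naive_bayes import MultinomialNB  # the classifier itself
```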

Dataset Preprocessing

Here we are going to apply various steps that prepare our dataset so that it is ready to be fitted to the model for training and evaluation.

Reading the Database (dataset)

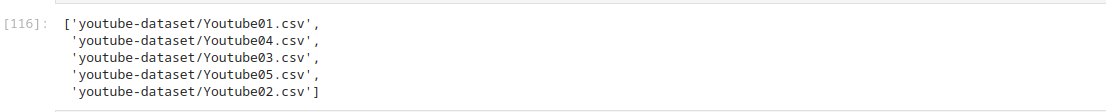

Let's grab all the files in our dataset so that we can easily work on them as a single pandas DataFrame, as follows:

Python
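A minimal sketch of this step, assuming the CSV files sit in a folder named `youtube-dataset` (a hypothetical name, adjust it to your own path). It only collects the file paths; reading them comes next.

```python
import glob

# Hypothetical folder name: point it at wherever the CSV files were extracted.
DATA_DIR = "youtube-dataset"

# Collect every CSV path in the folder, sorted so the order is reproducible.
files = sorted(glob.glob(f"{DATA_DIR}/*.csv"))
print(files)
```

If the folder does not exist, `glob.glob` simply returns an empty list, so an empty output usually means the path is wrong.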

The output looks something like this:

Then, let's join all the datasets into a single pandas DataFrame. We can use a for-each loop to go through all the files, read each one using pandas, and then add its rows to the single DataFrame.

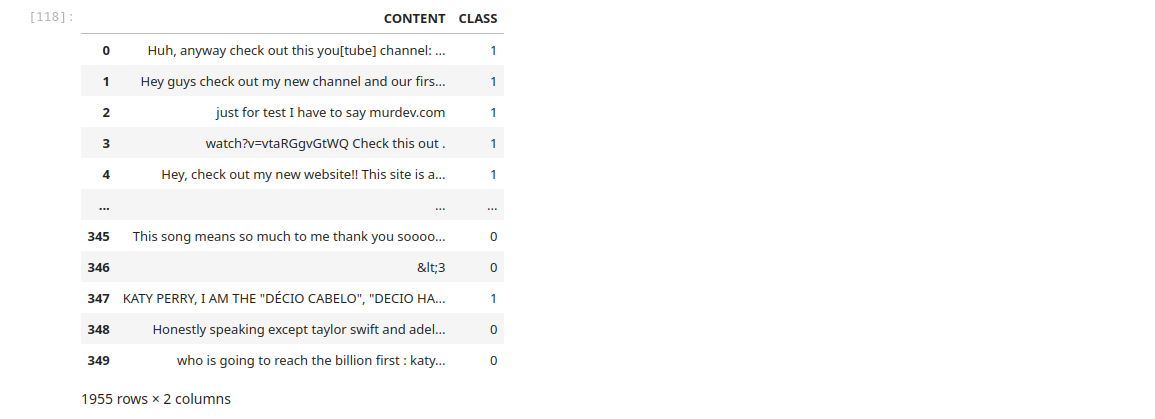

NOTE: We omitted the unnecessary columns (features) from the dataset, such as ‘COMMENT_ID’, ‘AUTHOR’ and ‘DATE’, so that only the important features required remain. We also dropped the index to ensure that the newly added rows follow one continuous sequence. The code implementation is as follows:

Python
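One way the loop described above could look. The helper name `load_comments` is made up for illustration, and the sketch assumes the remaining columns are `CONTENT` and `CLASS` as in the UCI files.

```python
import glob

import pandas as pd


def load_comments(folder):
    """Read every CSV in `folder` and stack the rows into one DataFrame."""
    frames = []
    for path in sorted(glob.glob(f"{folder}/*.csv")):
        # Drop the columns the classifier does not need; skip any that are absent.
        frame = pd.read_csv(path).drop(
            columns=["COMMENT_ID", "AUTHOR", "DATE"], errors="ignore"
        )
        frames.append(frame)
    if not frames:
        raise FileNotFoundError(f"No CSV files found in {folder!r}")
    # ignore_index=True rebuilds one continuous 0..n-1 index across all files.
    return pd.concat(frames, ignore_index=True)
```

Calling `data = load_comments("youtube-dataset")` (with your own folder path) would then give the combined DataFrame used in the following steps.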

Here is an example of the resulting data:

At this point we can check for any null values in the dataset. In the case of this dataset there were no null values. Here is the code implementation:

Python
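A small sketch of the null check. The rows below are made-up stand-ins for the combined DataFrame so that the snippet runs on its own.

```python
import pandas as pd

# Toy stand-in for the combined DataFrame (made-up rows, not the real dataset).
data = pd.DataFrame(
    {
        "CONTENT": ["I love this song", "Check out my channel", "Great video"],
        "CLASS": [0, 1, 0],
    }
)

# Count the missing values in each column; all zeros means nothing is missing.
null_counts = data.isnull().sum()
print(null_counts)
```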

The output should look something like this:

Splitting the Dataset

Remember the train_test_split function we imported from sklearn.model_selection: it is the one we are going to use to split our database into a training set and a test set. But before that we have to separate the target from the inputs, which makes splitting and working with the model easier.

Separating Inputs from Target

Here we are going to separate our dataset into inputs and target, starting from our previous pandas DataFrame. Here is the practical code implementation:

Python
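A sketch of the separation, assuming the comment text lives in a `CONTENT` column and the label in a `CLASS` column (0 for ham, 1 for spam). The rows are made up for illustration.

```python
import pandas as pd

# Toy stand-in for the combined DataFrame (made-up rows).
data = pd.DataFrame(
    {
        "CONTENT": ["I love this song", "Check out my channel", "Great video"],
        "CLASS": [0, 1, 0],
    }
)

inputs = data["CONTENT"]  # the comment text the model learns from
target = data["CLASS"]    # the label to predict: 0 = ham, 1 = spam
```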

Splitting the dataset into Training and Test Set

In this step we divide our dataset into a training set and a test set. The training set is used for training the model, and the test set is used to evaluate the model on unseen data. Here is the practical code implementation:

Python
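A minimal sketch of the split on made-up comments. The 80/20 ratio, the `random_state` value and the use of `stratify` are assumptions for illustration, not settings confirmed by the original code.

```python
import pandas as pd
from sklearn.model_selection import train_test_split

# Made-up comments: five spam (1) followed by five ham (0).
inputs = pd.Series(
    [
        "Check out my channel", "Subscribe for free gift cards",
        "Click this link to win money", "Visit my page for free followers",
        "Please subscribe to my channel", "I love this song",
        "Great video, thanks for sharing", "This brings back memories",
        "Her voice is amazing", "Best song of the year",
    ]
)
target = pd.Series([1] * 5 + [0] * 5)

# Hold out 20% of the rows for testing; stratify keeps the ham/spam ratio
# similar in both parts, and random_state makes the split repeatable.
x_train, x_test, y_train, y_test = train_test_split(
    inputs, target, test_size=0.2, random_state=365, stratify=target
)
print(len(x_train), len(x_test))
```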

Tokenizing the Dataset

This is a very important step, since we are dealing with text data, which falls under natural language processing. Tokenizing is going to help us create a dictionary of words and count how often each one occurs in every comment.

NOTE:

- We only apply fit_transform to the training dataset (x_train), since this is the call that creates the dictionary.

- We will then use the same tokenizer to transform the test data set, without fitting it again, and use the result for prediction.

Python

Training the model

This is the most important part of the machine learning process. In this step we initialize the classifier and fit it to the training data so that it can learn from it. Later we can use the model in production, or on our test set to perform the evaluation.

NOTE:

1. `.fit` is used to fit the training dataset to the model, which in other words means training the model on the training data.

Here is the practical code implementation:

Python
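A sketch of the training step on made-up comments. The `class_prior` value is the one mentioned in this article; the priors follow the sorted class order, so with labels 0 and 1 the first value applies to class 0 and the second to class 1.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Made-up training comments: 1 = spam, 0 = ham.
x_train = [
    "Check out my channel and subscribe",
    "Free gift cards, click this link now",
    "I love this song so much",
    "Best video ever, great voice",
]
y_train = [1, 1, 0, 0]

vectorizer = CountVectorizer()
x_train_transf = vectorizer.fit_transform(x_train)

# class_prior fixes the prior probability of each class instead of
# estimating it from the class frequencies in the training data.
clf = MultinomialNB(class_prior=np.array([0.6, 0.4]))
clf.fit(x_train_transf, y_train)

print(clf.classes_)
print(np.exp(clf.class_log_prior_))
```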

The output would look like:

NB: The model is initialized with an additional parameter, `class_prior = np.array([0.6, 0.4])`, which fixes the prior probability of each class instead of estimating it from the training data. This is an optimization parameter that was added to the model. In this article I am not going to discuss how to optimize the model. To learn about that, please check the article on how to optimize a Naive Bayes classifier model on our site, or comment below if you want it included in this article. We take those comments into account when modifying the code and improving this article to make it more useful.

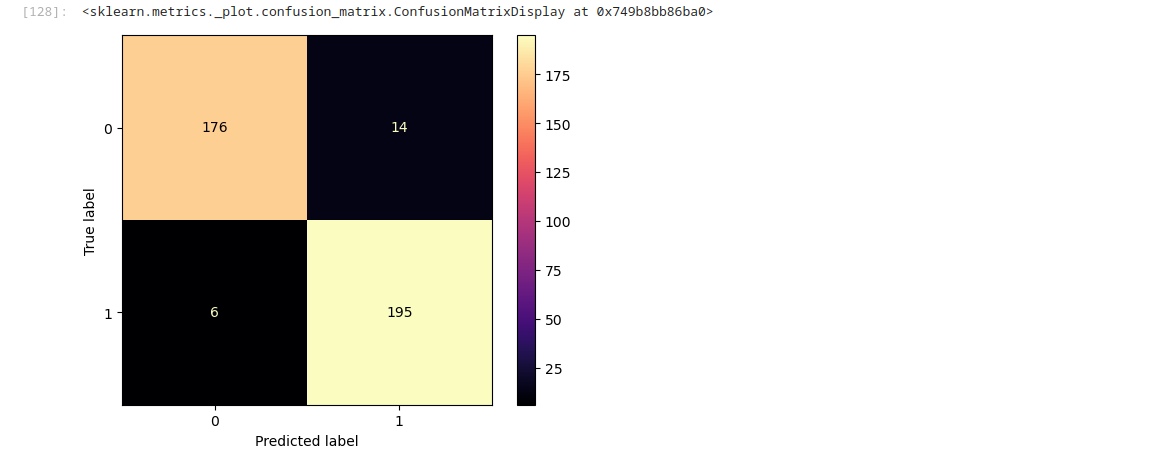

Evaluating the model performance

In this step we evaluate the model's performance on new, unseen data and check whether it gives results reliable enough to build our own production-ready system. For this evaluation we are going to use the previously transformed test set.

The code implementation is as follows:

Python
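A sketch of the evaluation on made-up data. It prints the confusion matrix as a plain array using `confusion_matrix`, which is a swapped-in simplification; the article itself refers to `ConfusionMatrixDisplay`, which plots the same matrix and needs matplotlib.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics import confusion_matrix
from sklearn.naive_bayes import MultinomialNB

# Made-up training and test comments: 1 = spam, 0 = ham.
x_train = ["Check out my channel and subscribe", "Free gift cards, click this link",
           "I love this song so much", "Best video ever, great voice"]
y_train = [1, 1, 0, 0]
x_test = ["Subscribe to my channel", "Click here for free stuff",
          "Great song, love it", "What a voice"]
y_test = [1, 1, 0, 0]

vectorizer = CountVectorizer()
clf = MultinomialNB(class_prior=np.array([0.6, 0.4]))
clf.fit(vectorizer.fit_transform(x_train), y_train)

# Predict on the transformed test set, then compare with the true labels.
y_pred = clf.predict(vectorizer.transform(x_test))

# Rows are the true classes, columns the predicted classes (order: 0, 1).
cm = confusion_matrix(y_test, y_pred, labels=[0, 1])
print(cm)

# To plot instead of print (requires matplotlib):
# ConfusionMatrixDisplay.from_predictions(y_test, y_pred)
```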

Here is the output:

NB: We intentionally did not include much information on how to use the Confusion Matrix Display in this article, but you can still learn how to use it in one of our other articles dedicated to the confusion matrix. Be sure to check it out, as it adds value to your machine learning skills.

WAIT: In case you think it is necessary to include those details in this article, you can leave your comment below. This will help us get your opinion on how to make this article even better.

Printing the classification report

The confusion matrix alone does not give an in-depth understanding of the model's performance. We need other methods to learn about further metrics, which include accuracy, precision, recall and F1-score. You can get all of that information by printing the classification report.

Here is the code implementation:

Python
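A sketch of printing the report on made-up data. The `target_names` labels and the `zero_division=0` setting are choices made for this illustration.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.metrics import classification_report
from sklearn.naive_bayes import MultinomialNB

# Made-up training and test comments: 1 = spam, 0 = ham.
x_train = ["Check out my channel and subscribe", "Free gift cards, click this link",
           "I love this song so much", "Best video ever, great voice"]
y_train = [1, 1, 0, 0]
x_test = ["Subscribe to my channel", "Click here for free stuff",
          "Great song, love it", "What a voice"]
y_test = [1, 1, 0, 0]

vectorizer = CountVectorizer()
clf = MultinomialNB(class_prior=np.array([0.6, 0.4]))
clf.fit(vectorizer.fit_transform(x_train), y_train)
y_pred = clf.predict(vectorizer.transform(x_test))

# Precision, recall, F1-score and support per class, plus overall accuracy.
report = classification_report(
    y_test, y_pred, labels=[0, 1], target_names=["Ham", "Spam"], zero_division=0
)
print(report)
```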

The output:

For more details on how to use the classification report, please check the dedicated article on the classification report here on our site.

Performing Real world prediction

Finally, we are ready to try our model on newly arriving comments. Here is how you can implement that:

Python
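A sketch of predicting on fresh comments, again with made-up training data so that it runs on its own. With the real dataset you would reuse the already fitted vectorizer and classifier instead of refitting them here.

```python
import numpy as np
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB

# Made-up training comments: 1 = spam, 0 = ham.
x_train = ["Check out my channel and subscribe", "Free gift cards, click this link",
           "I love this song so much", "Best video ever, great voice"]
y_train = [1, 1, 0, 0]

vectorizer = CountVectorizer()
clf = MultinomialNB(class_prior=np.array([0.6, 0.4]))
clf.fit(vectorizer.fit_transform(x_train), y_train)

# New, unlabeled comments (made up for illustration).
new_comments = ["Subscribe to my channel for free gift cards", "This song is so great"]

# Transform with the fitted vectorizer (no refitting), then predict.
new_transf = vectorizer.transform(new_comments)
predictions = clf.predict(new_transf)
probabilities = clf.predict_proba(new_transf)

for comment, label in zip(new_comments, predictions):
    print("Spam" if label == 1 else "Ham", "->", comment)
```

`predict_proba` returns one row per comment with the estimated probability of each class, which can help when you want to apply your own threshold rather than the default decision.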

What Next?

Thank you so much for making it to the end. In this article we implemented the Multinomial Naive Bayes classifier algorithm step by step and were able to classify whether a given comment is ham (meaning good) or spam. The learning doesn't end here. We have many other articles related to machine learning, so feel free to explore them, including Naive Bayes Classifier: A Complete Guide to Probabilistic Machine Learning.

In case you have faced any difficulty, please make good use of the comment section. I will personally be there to help you when you are stuck. You can also use the FAQs section below to understand more.

We think that sharing practical implementations of various machine learning skills on real-world examples is the key to mastery and to solving the various problems that affect our society, so we intend to teach you through practical means. If you think our idea is good, please leave us a comment below with your views or an article request. As usual, don't forget to up-vote this article and share it.

Frequently Asked Questions (FAQs)

What is the Naive Bayes Classifier?

- A Naive Bayes Classifier is a probabilistic machine learning algorithm based on Bayes’ theorem, used for classification tasks.

How does the Naive Bayes algorithm work?

- It calculates the posterior probability of each class given the input features and selects the class with the highest probability.

What are the assumptions of Naive Bayes?

- The main assumption is that all features are conditionally independent given the class label.

What types of data can Naive Bayes handle?

- It can handle both continuous and discrete data, with variations like Gaussian for continuous and Multinomial for discrete data.

In what applications is Naive Bayes commonly used?

- It is widely used in text classification, spam filtering, sentiment analysis, and recommendation systems.

What are the advantages of using Naive Bayes?

- Advantages include simplicity, speed, efficiency with large datasets, and good performance even with limited training data.

What are the limitations of Naive Bayes?

- Its primary limitation is the assumption of feature independence, which may not hold in real-world scenarios.

How do you evaluate the performance of a Naive Bayes model?

- Performance can be evaluated using metrics such as accuracy, precision, recall, and F1-score.

Can Naive Bayes be used for multi-class classification?

- Yes, Naive Bayes can effectively handle multi-class classification problems.

How do you implement a Naive Bayes Classifier in Python?

- You can implement it using libraries like Scikit-learn with classes like `GaussianNB`, `MultinomialNB`, or `BernoulliNB`.
