Understanding Deep Learning

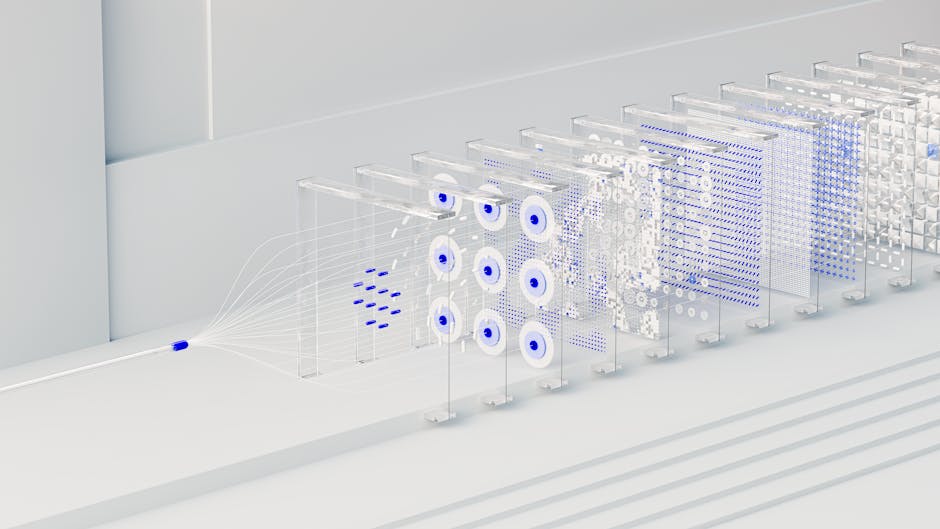

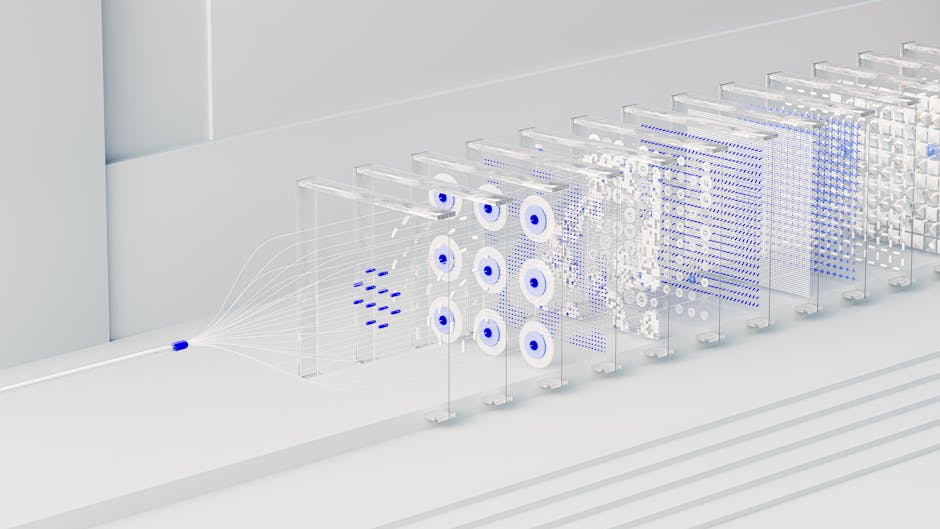

Deep learning, a subfield of machine learning, uses multiple layers of nodes in a way loosely inspired by how the human brain processes information. These nodes act like neurons, passing information through successive layers to extract features, recognize patterns, and make decisions. This multi-layered approach lets systems build complex representations of raw data and improve their performance without manual feature engineering.

Deep learning excels with unstructured data such as images or text, handling these real-world data types directly and often producing better results. Instead of relying on predefined features, the model learns to identify elements such as dogs or cars on its own. For images, it does this through layers of convolution operations.

Traditional machine learning typically relies on structured, labeled datasets. Features are extracted manually before the model makes predictions or classifications, which limits its ability to handle unexpected variations in the data. Deep learning is strongest in exactly this area.

Artificial neural networks (ANNs) consist of input, hidden, and output layers. The input layer receives raw data, hidden layers transform it, and the output layer produces decisions or predictions. These models learn through backpropagation: the error between the prediction and the target is computed, its gradient with respect to every weight is propagated backward through the layers, and the weights are adjusted to reduce the error over many training iterations.
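The forward pass, error calculation, and backpropagation described above can be sketched with a tiny two-layer network trained on the XOR problem. This is a minimal illustration assuming NumPy; the helper name `train_xor`, the sigmoid activations, the mean-squared-error loss, and the hidden size, learning rate, and epoch count are all hypothetical choices, not a reference implementation.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def train_xor(hidden=8, lr=1.0, epochs=5000, seed=0):
    """Illustrative sketch (hypothetical helper): a 2-layer network trained on XOR
    with hand-written backpropagation and plain gradient descent."""
    rng = np.random.default_rng(seed)
    X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
    y = np.array([[0], [1], [1], [0]], dtype=float)

    # Input -> hidden and hidden -> output parameters, randomly initialized
    W1 = rng.normal(0.0, 1.0, (2, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 1.0, (hidden, 1))
    b2 = np.zeros(1)

    losses = []
    for _ in range(epochs):
        # Forward pass: input layer -> hidden layer -> output layer
        h = sigmoid(X @ W1 + b1)
        out = sigmoid(h @ W2 + b2)
        err = out - y
        losses.append(float(np.mean(err ** 2)))  # mean squared error

        # Backward pass: chain rule from the loss back to every weight
        d_out = 2.0 * err / len(X) * out * (1.0 - out)
        dW2 = h.T @ d_out
        db2 = d_out.sum(axis=0)
        d_h = (d_out @ W2.T) * h * (1.0 - h)
        dW1 = X.T @ d_h
        db1 = d_h.sum(axis=0)

        # Gradient-descent step: move each weight against its gradient
        W1 -= lr * dW1
        b1 -= lr * db1
        W2 -= lr * dW2
        b2 -= lr * db2

    def predict(inputs):
        return sigmoid(sigmoid(inputs @ W1 + b1) @ W2 + b2)

    return losses, predict
```

Calling `losses, predict = train_xor()` and comparing `losses[0]` with `losses[-1]` shows the error shrinking over training. Whether `predict` reaches near-perfect XOR outputs depends on the initialization and hyperparameters.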

Deep learning can operate without explicit hand-written rules and, in unsupervised settings, without labels, learning patterns and correlations directly from data. It supports supervised, unsupervised, and reinforcement learning, across applications such as healthcare diagnostics, autonomous driving, and customer service.

Specialized architectures such as convolutional neural networks (CNNs) and recurrent neural networks (RNNs) further extend deep learning's capabilities. CNNs excel at image-related tasks, while RNNs handle sequential data such as speech or text, which shows how deep learning adapts to complex, domain-specific problems.

Types of Neural Networks

Feedforward Neural Networks (FNNs) are the simplest form: information moves in one direction, from the input through the hidden layers to the output, with no loops. They handle basic classification, pattern recognition, and regression tasks efficiently.

Convolutional Neural Networks (CNNs) are designed for grid-like data such as images. They include:

- Convolutional layers that apply learned filters to capture features such as edges and textures

- Pooling layers that reduce the spatial size of the feature maps

- Fully connected layers that combine the extracted features into predictions or classifications
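The convolution and pooling steps in the list above can be sketched in a few lines. This is a minimal illustration assuming NumPy; `conv2d` and `max_pool` are hypothetical helpers, the edge filter is hand-written rather than learned, and `conv2d` computes cross-correlation, which is what most deep-learning libraries implement under the name "convolution".

```python
import numpy as np


def conv2d(image, kernel):
    """Hypothetical helper: 'valid' 2-D cross-correlation of one channel with one filter."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Slide the filter over the image and sum the element-wise products
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool(feature_map, size=2):
    """Hypothetical helper: non-overlapping max pooling (leftover rows/columns are dropped)."""
    h = feature_map.shape[0] // size
    w = feature_map.shape[1] // size
    trimmed = feature_map[:h * size, :w * size]
    return trimmed.reshape(h, size, w, size).max(axis=(1, 3))


# A 6x6 image: dark left half, bright right half, i.e. one vertical edge
image = np.zeros((6, 6))
image[:, 3:] = 1.0

# Hand-written vertical-edge filter; a trained CNN learns filters like this itself
edge_kernel = np.array([[-1.0, 0.0, 1.0],
                        [-1.0, 0.0, 1.0],
                        [-1.0, 0.0, 1.0]])

features = conv2d(image, edge_kernel)  # 4x4 map; each row is [0, 3, 3, 0]
pooled = max_pool(features)            # 2x2 map keeping the strongest responses
```

The feature map responds only in the columns where the edge is, and pooling halves each spatial dimension while preserving that strong response. A fully connected layer would then operate on the flattened pooled values.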

CNNs excel at visual tasks such as object detection and facial recognition.

Recurrent Neural Networks (RNNs) handle sequential data, which makes them valuable for time-series prediction and natural language processing (NLP). They contain loops that pass a hidden state from one step to the next, giving the network context about earlier inputs. Advanced variants such as Long Short-Term Memory networks (LSTMs) and Gated Recurrent Units (GRUs) use gating mechanisms to manage long-term dependencies, improving performance on tasks such as speech recognition and language translation.
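The recurrence and gating described above can be sketched as a single LSTM step applied in a loop. This is a minimal illustration assuming NumPy; `lstm_step` and `run_lstm` are hypothetical names, the weights are random rather than trained, and the order in which the four gates are stacked in `W`, `U`, and `b` is an arbitrary choice for this sketch.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM time step (hypothetical helper).

    x: (input_dim,); h_prev, c_prev: (hidden,)
    W: (4*hidden, input_dim); U: (4*hidden, hidden); b: (4*hidden,)
    Assumed gate order in the stacked weights: input, forget, output, candidate.
    """
    n = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:n])            # input gate: how much new information to write
    f = sigmoid(z[n:2 * n])        # forget gate: how much old memory to keep
    o = sigmoid(z[2 * n:3 * n])    # output gate: how much memory to expose
    g = np.tanh(z[3 * n:])         # candidate values to write
    c = f * c_prev + i * g         # updated cell state (long-term memory)
    h = o * np.tanh(c)             # hidden state passed on to the next step
    return h, c


def run_lstm(sequence, hidden=4, seed=0):
    """Run an untrained LSTM over a (seq_len, input_dim) array; returns all hidden states."""
    rng = np.random.default_rng(seed)
    input_dim = sequence.shape[1]
    W = rng.normal(0.0, 0.1, (4 * hidden, input_dim))
    U = rng.normal(0.0, 0.1, (4 * hidden, hidden))
    b = np.zeros(4 * hidden)
    h = np.zeros(hidden)
    c = np.zeros(hidden)
    states = []
    for x in sequence:  # the loop carries h and c from one step to the next
        h, c = lstm_step(x, h, c, W, U, b)
        states.append(h)
    return np.stack(states)
```

For example, `run_lstm(np.ones((5, 3)))` returns five hidden states of size four, one per time step. The additive update of `c` is what lets information survive across many steps.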

These specialized networks (FNNs, CNNs, and RNNs) allow deep learning to adapt to a wide range of problems, driving advances across many sectors.

Deep Learning Algorithms

Convolutional Neural Networks (CNNs) are designed for image data and excel at tasks such as object detection and facial recognition. They combine convolutional layers that apply filters to detect features, pooling layers that reduce dimensionality, and fully connected layers that produce the final predictions.

Recurrent Neural Networks (RNNs) are tailored for sequential data and are well suited to time series and natural language. Advanced variants such as Long Short-Term Memory networks (LSTMs) use gates to control the flow of information, retaining context over longer sequences.

Generative Adversarial Networks (GANs) consist of two neural networks, a generator and a discriminator, trained against each other. The generator creates synthetic data, while the discriminator tries to distinguish real samples from generated ones. Each network improves in response to the other, so over time the generator produces increasingly realistic data.
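The competition described above is expressed through two loss functions, each computed from the discriminator's probability outputs. This minimal sketch assumes NumPy and the binary cross-entropy formulation. For the generator it uses the "non-saturating" loss, a common substitute for the original minimax term. The function names are hypothetical, and a full training loop, which alternates gradient steps on the two networks, is not shown.

```python
import numpy as np

EPS = 1e-12  # small constant to avoid log(0)


def discriminator_loss(d_real, d_fake):
    """Hypothetical helper: binary cross-entropy that pushes D(real) toward 1 and D(fake) toward 0.

    d_real, d_fake: discriminator probabilities for real and generated samples.
    """
    return -np.mean(np.log(d_real + EPS) + np.log(1.0 - d_fake + EPS))


def generator_loss(d_fake):
    """Hypothetical helper: non-saturating generator loss that pushes D(fake) toward 1."""
    return -np.mean(np.log(d_fake + EPS))
```

When the discriminator cannot tell real from fake (it outputs 0.5 for both), its loss is 2 ln 2. A confident, correct discriminator has a lower loss, and the generator's loss is then high, which is the pressure that drives the generator to improve.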

Autoencoders are used for data compression and dimensionality reduction: they encode input data into a lower-dimensional representation and then decode it back. They are useful for tasks such as anomaly detection, where inputs that the model reconstructs poorly are flagged as unusual.
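Encoding, decoding, and reconstruction-error anomaly detection can be sketched with a linear autoencoder. To keep the sketch short and deterministic, the weights are not learned by gradient descent here. Instead, they are taken from a singular value decomposition, because the optimal linear autoencoder under squared error spans the same subspace as the top principal components. This is a deliberate substitution for illustration; a deep autoencoder would use nonlinear layers trained by backpropagation. NumPy, the helper names, the synthetic data, and the 99th-percentile threshold are all assumptions.

```python
import numpy as np


def fit_linear_autoencoder(X, latent_dim):
    """Hypothetical helper: linear encoder/decoder with weights taken from SVD (the PCA solution)."""
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    W = Vt[:latent_dim].T  # (features, latent_dim)

    def encode(x):
        return (x - mean) @ W  # compress to latent_dim numbers per sample

    def decode(z):
        return z @ W.T + mean  # map back to the original feature space

    return encode, decode


def reconstruction_error(X, encode, decode):
    """Squared reconstruction error per sample."""
    return np.sum((X - decode(encode(X))) ** 2, axis=1)


# Synthetic "normal" data: 3-D points lying close to a single line
rng = np.random.default_rng(0)
t = rng.normal(size=(200, 1))
normal = t * np.array([1.0, 2.0, 3.0]) + 0.05 * rng.normal(size=(200, 3))
encode, decode = fit_linear_autoencoder(normal, latent_dim=1)

errors = reconstruction_error(normal, encode, decode)
threshold = np.percentile(errors, 99)       # assumed cut-off for "unusual"
anomaly = np.array([[3.0, -2.0, 1.0]])      # a point far off that line
is_anomaly = reconstruction_error(anomaly, encode, decode) > threshold
```

Normal points compress into one latent number with almost no loss, while the off-line point cannot be reconstructed from that single dimension, so its error exceeds the threshold.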

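Deep Q-Networks (DQNs) combine deep learning with reinforcement learning. A neural network estimates the value of each action in a given state, which lets the agent handle environments with high-dimensional state spaces. They are best known for playing games, such as Atari video games played from raw pixels.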
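The core of DQN training is regressing Q(s, a) toward a Bellman target built from the reward and the best estimated value of the next state. This minimal sketch assumes NumPy, batched arrays, and a squared temporal-difference (TD) error; Huber loss is a common alternative. The function names are hypothetical, and the network itself, experience replay, and the periodically updated target network are not shown.

```python
import numpy as np


def dqn_targets(rewards, next_q_values, dones, gamma=0.99):
    """Hypothetical helper: Bellman targets y = r + gamma * max_a' Q_target(s', a').

    rewards: (batch,); next_q_values: (batch, num_actions) from the target network;
    dones: (batch,) with 1.0 where the episode ended (no bootstrapping past the end).
    """
    return rewards + gamma * next_q_values.max(axis=1) * (1.0 - dones)


def td_loss(q_values, actions, targets):
    """Mean squared TD error between Q(s, a) for the actions taken and the targets."""
    chosen = q_values[np.arange(len(actions)), actions]
    return np.mean((targets - chosen) ** 2)
```

With rewards `[1, 0]`, next-state values `[[0.5, 2.0], [1.0, 3.0]]`, the second transition ending its episode, and `gamma=0.9`, the targets are `[2.8, 0.0]`. The terminal transition receives only its reward.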

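Variational Autoencoders (VAEs) extend traditional autoencoders with a probabilistic approach. Instead of mapping each input to a single point in the latent space, the encoder outputs the parameters of a probability distribution, typically a mean and a variance. Sampling from this latent space and decoding the sample produces new data, which makes VAEs suitable for creative tasks such as generating new artwork.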
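Two pieces distinguish a VAE from a plain autoencoder: sampling the latent vector in a way that gradients can pass through (the reparameterization trick), and a KL-divergence term that keeps the encoder's distributions close to a standard normal prior. This sketch assumes NumPy, a diagonal Gaussian encoder that outputs `mu` and `log_var`, and hypothetical function names. The encoder and decoder networks are not shown.

```python
import numpy as np


def reparameterize(mu, log_var, rng):
    """Hypothetical helper: sample z = mu + sigma * eps with eps ~ N(0, I).

    Writing the sample this way keeps mu and log_var inside a differentiable expression.
    """
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps


def kl_to_standard_normal(mu, log_var):
    """KL( N(mu, sigma^2) || N(0, I) ), summed over latent dimensions."""
    return -0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var), axis=-1)
```

The full VAE loss adds this KL term to the reconstruction error. The KL term is zero when `mu` is 0 and `log_var` is 0, and it grows as the encoder's distribution drifts from the prior. Generating new data then amounts to decoding samples drawn from N(0, I).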

Graph Neural Networks (GNNs) work on graph-structured data, passing information between nodes along edges to capture relationships and dependencies within complex datasets.
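Message passing can be sketched as one layer in which each node averages its own features with those of its neighbors, then applies a learned linear map and a nonlinearity. This is a simplified mean-aggregation layer in the spirit of graph convolutional networks; published GCNs typically use symmetric degree normalization instead. NumPy, the name `gnn_layer`, and the toy path graph are assumptions.

```python
import numpy as np


def gnn_layer(A, H, W):
    """Hypothetical helper: one mean-aggregation message-passing layer.

    A: (n, n) adjacency matrix; H: (n, d_in) node features; W: (d_in, d_out) weights.
    """
    A_hat = A + np.eye(A.shape[0])          # self-loops so each node keeps its own features
    deg = A_hat.sum(axis=1, keepdims=True)  # number of contributors per node
    messages = (A_hat @ H) / deg            # mean over each node's neighborhood
    return np.maximum(messages @ W, 0.0)    # linear transform followed by ReLU


# Path graph 0 - 1 - 2; only node 0 starts with a nonzero feature
A = np.array([[0.0, 1.0, 0.0],
              [1.0, 0.0, 1.0],
              [0.0, 1.0, 0.0]])
H = np.array([[1.0], [0.0], [0.0]])
W = np.eye(1)  # identity weights, so the propagation is easy to follow

out1 = gnn_layer(A, H, W)     # information reaches node 1 but not yet node 2
out2 = gnn_layer(A, out1, W)  # after a second layer it reaches node 2
```

Each layer extends a node's view by one hop, so stacking layers lets a GNN capture dependencies between nodes that are several edges apart.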

Transformer networks have revolutionized natural language processing. They use self-attention to relate every position in a sequence to every other position and process the whole sequence in parallel rather than step by step. They excel at tasks such as text generation and machine translation.
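The self-attention mechanism described above can be sketched as single-head scaled dot-product attention, computed for all positions at once with matrix multiplications. This is a minimal illustration assuming NumPy, random projection matrices, no masking, and a single head; production transformers add multiple heads, masking, residual connections, and layer normalization. The function names are hypothetical.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def self_attention(X, Wq, Wk, Wv):
    """Hypothetical helper: single-head scaled dot-product self-attention.

    X: (seq_len, d_model); Wq, Wk: (d_model, d_k); Wv: (d_model, d_v).
    Returns the attended values (seq_len, d_v) and the attention weights (seq_len, seq_len).
    """
    Q, K, V = X @ Wq, X @ Wk, X @ Wv
    scores = Q @ K.T / np.sqrt(K.shape[-1])  # every position scores every other position
    weights = softmax(scores, axis=-1)       # each row is a distribution summing to 1
    return weights @ V, weights


rng = np.random.default_rng(0)
X = rng.normal(size=(5, 8))  # 5 tokens with 8-dimensional embeddings
Wq, Wk, Wv = (rng.normal(size=(8, 4)) for _ in range(3))
out, weights = self_attention(X, Wq, Wk, Wv)
```

There is no loop over positions: the whole sequence is handled in a few matrix products. This is the property that lets transformers train in parallel, unlike RNNs.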

Deep Belief Networks (DBNs) consist of multiple layers of stochastic hidden variables, typically built from stacked restricted Boltzmann machines (RBMs). They are trained greedily, one layer at a time, and are used for feature extraction and dimensionality reduction.
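Greedy layer-by-layer training can be sketched as follows. Each RBM is trained with one-step contrastive divergence (CD-1), and its hidden-unit probabilities then become the input to the next RBM. This is a minimal illustration assuming NumPy and binary inputs. The helper names, learning rate, and epoch count are hypothetical, and feeding probabilities rather than sampled states to the next layer is a common simplification. Fine-tuning of the stacked network is not shown.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def cd1_update(v0, W, b_vis, b_hid, rng, lr=0.1):
    """Hypothetical helper: one CD-1 step for a binary RBM.

    v0: (batch, n_visible) data; W: (n_visible, n_hidden).
    """
    # Positive phase: hidden probabilities given the data, then stochastic binary samples
    p_h0 = sigmoid(v0 @ W + b_hid)
    h0 = (rng.random(p_h0.shape) < p_h0).astype(float)
    # Negative phase: one Gibbs step down to the visible layer and back up
    p_v1 = sigmoid(h0 @ W.T + b_vis)
    p_h1 = sigmoid(p_v1 @ W + b_hid)
    n = v0.shape[0]
    W = W + lr * (v0.T @ p_h0 - p_v1.T @ p_h1) / n
    b_vis = b_vis + lr * (v0 - p_v1).mean(axis=0)
    b_hid = b_hid + lr * (p_h0 - p_h1).mean(axis=0)
    return W, b_vis, b_hid


def train_rbm(data, n_hidden, epochs=50, seed=0):
    rng = np.random.default_rng(seed)
    W = rng.normal(0.0, 0.01, (data.shape[1], n_hidden))
    b_vis = np.zeros(data.shape[1])
    b_hid = np.zeros(n_hidden)
    for _ in range(epochs):
        W, b_vis, b_hid = cd1_update(data, W, b_vis, b_hid, rng)
    return W, b_vis, b_hid


def pretrain_dbn(data, layer_sizes, epochs=50, seed=0):
    """Greedy layer-wise pretraining: each trained RBM's features feed the next RBM."""
    layers = []
    x = data
    for n_hidden in layer_sizes:
        W, _, b_hid = train_rbm(x, n_hidden, epochs, seed)
        layers.append((W, b_hid))
        x = sigmoid(x @ W + b_hid)  # this layer's features become the next layer's input
    return layers, x


rng = np.random.default_rng(1)
data = (rng.random((20, 6)) < 0.5).astype(float)  # toy binary dataset
layers, top_features = pretrain_dbn(data, layer_sizes=[4, 3])
```

The result is a stack of weight matrices (6 to 4, then 4 to 3) and a compressed three-dimensional feature vector per input, illustrating how DBNs perform feature extraction and dimensionality reduction layer by layer.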

These algorithms show the adaptability and power of neural networks in addressing complex tasks across domains, driving innovation in real-world applications.

Applications of Deep Learning

Deep learning has made significant strides across many industries. In healthcare, it supports early disease detection and diagnosis. Convolutional neural networks (CNNs) analyze medical images with high precision, identifying anomalies that the human eye might miss. Deep learning models also predict patient outcomes from large datasets, enabling more personalized medical care.

In finance, deep learning improves security and efficiency. It aids fraud detection, where models identify unusual patterns that indicate fraudulent activity. It also supports algorithmic trading by analyzing market trends and forecasting stock performance, enabling data-driven business decisions.

Customer service has been transformed by chatbots and virtual assistants powered by deep learning. These AI-driven interfaces use natural language processing (NLP) to answer customer inquiries accurately, making interactions feel personal and efficient.

Autonomous driving is another field where deep learning has shown transformative potential. Self-driving systems can use deep reinforcement learning to learn how to navigate complex road scenarios. CNNs process visual and spatial data to detect and recognize objects, while recurrent neural networks (RNNs) handle the temporal aspects of driving.

Deep learning is central to speech recognition and speech generation. Virtual assistants use it to interpret voice commands accurately and respond, enabling functions such as setting reminders and controlling smart home devices.

Deep learning's impact spans many industries, bringing advances in healthcare diagnostics, financial security, customer service automation, and autonomous driving. Its ability to learn from unstructured data enables new solutions and improves operational efficiency across multiple domains.

Challenges and Advantages

Deep learning offers substantial advantages. One key benefit is accuracy: given enough data, it often outperforms traditional machine learning models on tasks that require complex data analysis and pattern recognition.

Another important advantage is automated feature engineering. Deep learning models discover useful features from the data during training, which simplifies the development pipeline and makes the same approach adaptable across diverse tasks and datasets.

However, deep learning comes with its own challenges. Data availability is a pressing issue, as these models need large amounts of data to perform well. Obtaining the labeled data that supervised learning requires adds another layer of cost and complexity.

The computational resources required for deep learning are another significant challenge. Training involves intensive numerical computation, usually on GPUs or other specialized accelerators, which can be prohibitive for organizations with limited access to advanced computing infrastructure.

Interpretability remains a critical challenge. The "black box" nature of deep neural networks makes it hard to understand and explain how they reach their decisions, which matters in sensitive fields such as healthcare and finance, where decisions must be justified.

Weighing these advantages against the challenges gives a more balanced view of deep learning. The technology has tremendous potential, but issues of data availability, computational cost, and interpretability must be addressed to realize its full capabilities responsibly.

Future Trends in Deep Learning

Deep learning is poised to drive major advances across multiple domains, underpinned by innovations in hardware, algorithms, and new applications.

A significant trend is the development of more efficient and powerful hardware designed for deep learning workloads, such as Tensor Processing Units (TPUs) and custom AI chips. These advances are expected to enable faster training, lower energy consumption, and the ability to handle ever larger datasets.

Algorithmic innovation is another driving force. Research continues into attention mechanisms and transformers, and into neural architecture search (NAS), which aims to automate the design of neural networks and optimize architectures for specific tasks.

Emerging applications of deep learning are likely to transform many industries by drawing on its ability to process and analyze large amounts of unstructured data. In healthcare, advances in personalized medicine are expected to rely heavily on deep learning, while environmental sciences stand to benefit from AI in climate modeling.

Another area of rapid progress is the integration of deep learning with edge computing, which enables real-time data processing and decision-making directly on devices such as smartphones, IoT devices, and autonomous drones.

Ethical considerations and interpretability will also shape the future of deep learning. Work on explainable AI (XAI) and on mitigating bias in datasets and models aims to make AI systems operate transparently, fairly, and accountably.

In summary, deep learning is on an upward trajectory, driven by breakthroughs in hardware, new algorithms, and an expanding range of applications. As AI becomes more deeply integrated into everyday life, deep learning is likely to significantly augment human capabilities and contribute to societal progress.

Deep learning's ability to process unstructured data, learn without labels, and refine representations through successive layers of transformations sets it apart from traditional algorithms. It turns raw data into actionable insights in a way loosely inspired by the human brain, opening the door to new solutions in many fields.
