Deep learning has significantly transformed natural language processing (NLP) by automating complex tasks that previously required extensive manual effort. This article examines various deep learning models and their applications, highlighting the advancements and challenges in the field.

Introduction to Deep Learning in NLP

Deep learning has become an essential part of natural language processing (NLP) in recent years. Unlike traditional methods that relied on task-specific feature engineering, deep learning employs neural networks that streamline and automate these processes.

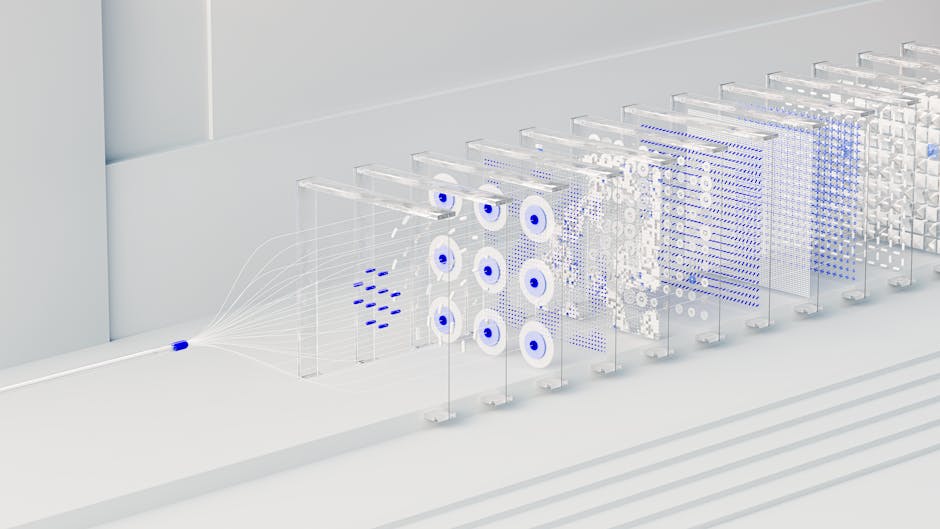

Neural networks, consisting of layers of neurons, are loosely modeled on the way the human brain functions. Each neuron processes input data and passes its output to the next layer. Over time, the network learns to identify patterns and make predictions. This is particularly useful for NLP tasks, where language's intricacy often demands a nuanced, context-aware approach.
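To make the layer-by-layer flow concrete, here is a minimal sketch of a forward pass through two small layers. The weights, biases, and input features are hypothetical hand-picked values, not taken from any trained model, and the sigmoid activation is just one common choice.

```python
import math


def sigmoid(x: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def dense_layer(inputs, weights, biases):
    """Each neuron computes a weighted sum of its inputs plus a bias,
    applies the activation, and hands the result to the next layer."""
    return [
        sigmoid(sum(w * x for w, x in zip(neuron_weights, inputs)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


# Hypothetical parameters: 3 input features -> 2 hidden neurons -> 1 output.
hidden_w = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]]
hidden_b = [0.0, 0.1]
output_w = [[1.0, -1.0]]
output_b = [0.0]

features = [1.0, 0.0, 2.0]  # illustrative input values
hidden = dense_layer(features, hidden_w, hidden_b)
prediction = dense_layer(hidden, output_w, output_b)
print(hidden, prediction)
```

In a real network these parameters would be learned from data rather than written by hand.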

Word vector representations like Word2Vec and GloVe transform words into dense numerical vectors that capture semantic meaning. This enables models to recognize relationships between words, like synonyms or analogies.
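One common way to compare such vectors is cosine similarity. The sketch below uses made-up three-dimensional vectors purely for illustration; real Word2Vec or GloVe embeddings typically have hundreds of dimensions and are learned from text.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors: near 1.0 means similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical toy embeddings (not real Word2Vec or GloVe values).
embeddings = {
    "good": [0.9, 0.1, 0.0],
    "great": [0.8, 0.2, 0.1],
    "table": [0.0, 0.1, 0.9],
}

sim_synonyms = cosine_similarity(embeddings["good"], embeddings["great"])
sim_unrelated = cosine_similarity(embeddings["good"], embeddings["table"])
print(sim_synonyms, sim_unrelated)
```

With these toy values, the near-synonyms score much higher than the unrelated pair, which is the property a model exploits when it treats nearby vectors as related words.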

Types of Neural Networks in NLP:

- Window-based neural networks: Consider a fixed number of surrounding words to derive meaning

- Recurrent neural networks (RNNs): Excel at handling sequences, preserving the temporal order of words

- Recursive neural networks: Apply weights recursively, turning hierarchical structures into meaningful representations

- Convolutional neural networks (CNNs): Capture local dependencies in text using sliding filters

- Transformers: Employ "attention" mechanisms to weigh word relevance, effective for long-term dependencies
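The attention idea in the last item can be sketched in a few lines. This is a deliberately simplified version of scaled dot-product self-attention: it assumes the queries, keys, and values are the input vectors themselves, whereas a real Transformer applies learned projection matrices first. The token vectors are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified self-attention: no learned projections (an assumption
    made for brevity), so queries, keys, and values are all `vectors`."""
    dim = len(vectors[0])
    outputs, all_weights = [], []
    for query in vectors:
        # Relevance of every token to the current one, scaled by sqrt(dim).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # New representation: relevance-weighted mix of all token vectors.
        mixed = [sum(w * v[i] for w, v in zip(weights, vectors)) for i in range(dim)]
        outputs.append(mixed)
        all_weights.append(weights)
    return outputs, all_weights


tokens = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0]]  # hypothetical token vectors
outputs, weights = self_attention(tokens)
print(weights[0])
```

Because every token attends to every other token directly, distance in the sequence does not weaken the connection, which is why attention copes well with long-range dependencies.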

Training these models involves feeding them large amounts of text data and using techniques like backpropagation to minimize prediction errors. Adjustments to the models continue until they perform at a high level of accuracy.
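As a rough illustration of this loop, the sketch below trains a single sigmoid neuron with gradient descent on a tiny invented dataset. With only one layer, backpropagation reduces to a single application of the chain rule; the data, learning rate, and number of steps are all assumptions chosen for readability.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def mean_loss(weight, bias, data):
    """Average cross-entropy between predictions and labels."""
    total = 0.0
    for x, y in data:
        p = sigmoid(weight * x + bias)
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(data)


# Hypothetical data: x = (positive words - negative words), y = 1 if positive.
data = [(2.0, 1), (1.0, 1), (-1.0, 0), (-2.0, 0)]
weight, bias, learning_rate = 0.0, 0.0, 0.5

initial_loss = mean_loss(weight, bias, data)
for _ in range(100):
    grad_w = grad_b = 0.0
    for x, y in data:
        p = sigmoid(weight * x + bias)
        error = p - y          # gradient of the loss w.r.t. the pre-activation
        grad_w += error * x    # chain rule: propagate the error to the weight
        grad_b += error
    # Step against the gradient to reduce the prediction error.
    weight -= learning_rate * grad_w / len(data)
    bias -= learning_rate * grad_b / len(data)
final_loss = mean_loss(weight, bias, data)
print(initial_loss, final_loss)
```

Deep networks repeat the same error-propagation step through many layers, but the update rule has the same shape.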

The importance of deep learning in NLP is evident in applications like machine translation, sentiment analysis, and chatbots. By automating feature extraction and leveraging large datasets, deep learning models have made significant progress in understanding and generating human language.[1]

Key Deep Learning Models for NLP

Key deep learning models for NLP include Convolutional Neural Networks (CNNs), Recurrent Neural Networks (RNNs), Long Short-Term Memory (LSTM) networks, and Transformers. Each offers unique architectural advantages suited to specific NLP tasks.

| Model | Mechanism | Typical tasks |
| --- | --- | --- |
| CNNs | Apply multiple filters to input word vectors | Sentence classification |
| RNNs | Handle sequential data, retain information across time steps | Language modeling, text generation |
| LSTMs | Introduce gating mechanisms to control information flow | Learning long-term dependencies |
| Transformers | Employ self-attention mechanisms | Various NLP tasks (e.g., BERT for question answering, GPT for text generation) |

BERT excels in tasks requiring deep bidirectional understanding, such as question answering and language inference. GPT is optimized for text generation and language modeling, where it generates coherent and contextually relevant text by predicting the next word in a sequence.
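The "predict the next word, append it, repeat" loop can be shown with a toy stand-in. The sketch below is not GPT and contains no neural network: it replaces the model with simple bigram counts over an invented corpus, purely to illustrate the autoregressive generation loop.

```python
from collections import Counter, defaultdict

# Hypothetical miniature corpus; a real model trains on vastly more text.
corpus = "the cat sat on the mat . the cat ate the fish .".split()

# Count which word follows which (a bigram table standing in for the model).
next_counts = defaultdict(Counter)
for current, following in zip(corpus, corpus[1:]):
    next_counts[current][following] += 1


def generate(start, max_words=5):
    """Greedy autoregressive generation: repeatedly append the most
    frequent follower of the last word produced so far."""
    words = [start]
    for _ in range(max_words):
        candidates = next_counts.get(words[-1])
        if not candidates:
            break  # no known continuation for this word
        words.append(candidates.most_common(1)[0][0])
    return words


print(" ".join(generate("on", max_words=2)))
```

A language model such as GPT follows the same outer loop but conditions each prediction on the whole preceding context rather than only the last word, and usually samples from the predicted distribution instead of always taking the top choice.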

These models leverage large amounts of training data and powerful computational techniques to optimize their performance. The continuous improvement of these models ensures they remain at the forefront of NLP research and applications.[2]

Applications of Deep Learning in NLP

Deep learning's versatility in NLP has led to its adoption in various real-world applications:

- Sentiment Analysis: Determines the emotional tone behind text, such as classifying customer reviews

- Machine Translation: Improves automated translation services, accounting for context and grammar

- Named Entity Recognition (NER): Extracts specific information like names, organizations, and locations

- Text Generation: Creates content like emails, articles, and creative works

- Chatbots: Simulate natural, conversational interactions for customer service automation

Deep learning in NLP enables the creation of comprehensive, integrated systems that combine multiple techniques. For example, a single application might combine sentiment analysis, machine translation, and chatbots to provide multilingual customer support that understands and adapts to the user's emotional state.

"As models continue to learn from expansive datasets and refine their predictive capabilities, their performance on complex language tasks will improve, further embedding AI into everyday life."

Case Studies:

Yelp has implemented deep learning for sentiment analysis to better understand and categorize user reviews. Duolingo uses machine translation models to create more engaging and accurate language lessons.[3]

Challenges and Limitations

Deep learning in NLP faces several challenges and limitations:

- Data Sparsity: Large neural networks require extensive datasets, which can be resource-intensive to obtain and annotate

- Language Ambiguity: Words and sentences often carry multiple meanings depending on context, challenging even sophisticated models

- Computational Complexity: Training and deploying large models demands significant computational power and energy

- Common Sense and Contextual Understanding: Models may excel in processing syntactic structure but struggle with deeper semantic comprehension

Potential improvements include:

- Transfer learning to fine-tune models on domain-specific datasets

- Advances in unsupervised and semi-supervised learning to reduce dependency on labeled data

- Developing algorithms that better handle ambiguity and context

- Model compression techniques, such as pruning and quantization, to streamline models
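Quantization, mentioned in the last item, can be illustrated with a minimal sketch of symmetric 8-bit linear quantization. The weight values are hypothetical, and production toolkits use more elaborate schemes (per-channel scales, calibration data), so treat this as the basic idea only.

```python
def quantize_int8(weights):
    """Symmetric linear quantization: map floats to integers in [-127, 127]
    using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [round(w / scale) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in quantized]


weights = [0.42, -1.27, 0.05, 0.9]  # hypothetical model weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
print(q, max_error)
```

Each weight now fits in one byte instead of four, at the cost of a rounding error bounded by half the scale factor.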

Addressing ethical considerations and bias is critical. Current models often inherit biases present in their training data, leading to unfair outputs. Curating balanced datasets and developing algorithms that detect and mitigate bias are essential steps toward creating trustworthy and fair AI systems.[4]

Future Trends in Deep Learning for NLP

Several developments are shaping the future of deep learning for natural language processing (NLP):

- Scalable pre-trained models: Large language models (LLMs) like GPT-3 have demonstrated the potential of pre-training on extensive datasets. Future models aim to improve the quality and versatility of NLP applications.

- Multimodal approaches: Combining text with other data forms such as images, audio, and video offers a more comprehensive understanding of contextual information. This integration allows AI systems to interpret information more holistically.

- Integration with other AI technologies: Combining NLP with advances in computer vision, robotics, and reinforcement learning can lead to more sophisticated and contextually aware AI systems.

- Knowledge integration: Incorporating external knowledge sources, such as databases or knowledge graphs, into NLP models addresses limitations like lack of common sense and factual inaccuracies.

- Continuous learning and adaptation: Models that evolve based on new data and user feedback remain relevant and effective over time. Techniques like federated learning enable ongoing model improvement while preserving data privacy.

- Collaborative AI: Multiple models or agents working together to solve complex problems have the potential to enhance overall AI system capabilities.

- Ethical and unbiased AI: Developing models that perform well and operate fairly and transparently is increasingly critical. The focus is on creating algorithms that identify and mitigate biases in training data and improve model interpretability.
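The federated learning approach mentioned in the list above can be sketched through its core aggregation step, often called federated averaging. In this simplified version, each client trains locally and shares only its model weights, and a server combines them weighted by how much data each client holds. The client weights and dataset sizes are hypothetical, and real systems add secure aggregation and many training rounds.

```python
def federated_average(client_weights, client_sizes):
    """Combine per-client model weights into one global model, weighting
    each client by its number of local training examples. Raw user data
    never leaves the client; only the weights are shared."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]


# Hypothetical locally trained weights from two clients.
client_weights = [[0.2, 1.0], [0.4, 0.0]]
client_sizes = [100, 300]  # number of local examples per client

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)
```

The client with more data pulls the global model further toward its own weights, which is the intended behavior of size-weighted averaging.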

These trends are likely to lead to more capable, versatile, and ethical NLP systems, driving innovation and creating new possibilities in AI applications. Recent studies have shown that multimodal models can achieve up to 20% improvement in accuracy compared to text-only models.[1]

"The future of NLP lies in the seamless integration of multiple modalities and the ability to continuously learn and adapt." – Dr. Emily Chen, AI Research Scientist

Deep learning's impact on NLP is driving innovations that make technology more responsive to human language. As models evolve, their improved abilities to understand and generate text will further integrate AI into everyday applications. For instance:

- Advanced chatbots capable of engaging in nuanced conversations

- More accurate language translation systems

- Sophisticated content generation tools for various industries

The potential applications are vast, ranging from healthcare to education to customer service. As these systems become more refined, they have the potential to revolutionize how we interact with technology and process information.

Writio: The ultimate AI content writer for websites. This article was written by Writio.
