Understanding Deep Learning

Deep learning, a subset of machine learning, uses neural networks to recognize patterns in unstructured data. Neural networks are algorithms modeled loosely after the architecture of the human brain, designed to recognize patterns through layers of processing units called neurons.

What sets deep learning apart is the number of layers through which the data is transformed. Each layer provides a different interpretation of the input data, building up the complexity of what can be recognized as you go deeper.

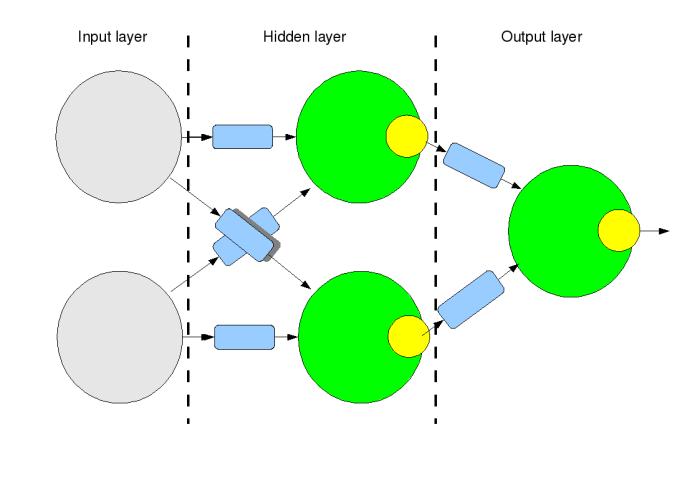

Neural networks consist of input, hidden, and output layers. The input layer receives raw data, the hidden layers perform calculations to interpret that data, and the output layer delivers the final prediction. Each neuron connects to many others, and these connections together form a vast network.

In traditional machine learning, features must typically be engineered by hand. In contrast, deep learning algorithms try to learn those features without specific direction. Each node assigns a weight to its incoming data, determining how much influence it should have on the next layer. By the output layer, the network has decided what the input represents more autonomously than traditional machine learning would allow.
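
To make the weighting idea concrete, here is a minimal sketch of a forward pass through a tiny network with one hidden layer. The layer sizes, the weight values, and the helper names `relu`, `dense`, and `forward` are illustrative assumptions, not taken from any particular library; a real network would learn its weights during training.

```python
def relu(values):
    """Zero out negative activations."""
    return [max(0.0, v) for v in values]


def dense(inputs, weights, biases):
    """Weighted sum per neuron: each row of `weights` holds one neuron's input weights."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]


def forward(inputs):
    # Hypothetical, hand-picked weights; a real network learns these during training.
    hidden_w = [[0.5, -1.0], [1.0, 1.0]]
    hidden_b = [0.0, -0.5]
    output_w = [[1.0, 2.0]]
    output_b = [0.1]

    hidden = relu(dense(inputs, hidden_w, hidden_b))  # hidden layer interprets the input
    return dense(hidden, output_w, output_b)          # output layer produces the prediction


prediction = forward([1.0, 2.0])
```

The larger a weight's magnitude, the more the corresponding input influences the next layer, which is the mechanism the paragraph above describes.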

Deep learning's ability to process intricate data structures makes it invaluable for tasks demanding extensive recognition capabilities, such as:

- Speech recognition

- Language translation

- Image classification

These tasks, often encumbered by noise and ambiguity when tackled by traditional algorithms, benefit from deep learning's structure, which turns each layer of nodes into a focused filter for useful information.

Industry applications of deep learning are vast, ranging from automatic language translation services to facial recognition systems and improved medical diagnoses. By capturing intricate patterns too subtle for a human or a classical program to notice quickly, it lets systems improve both speed and accuracy.

Deep learning requires significant computational power. As networks grow deeper, the algorithms need to compute more complex feature interactions, increasing the need for powerful hardware.

Key Technologies and Algorithms

Convolutional Neural Networks (CNNs) are renowned for handling image data. Loosely inspired by the human visual system, CNNs use filtering stages to process pixel data and identify spatial hierarchies in images. These filters tune themselves during training, picking up features like edges, textures, and patterns, which are invaluable for image and video recognition as well as medical image analysis.
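
The filtering stage can be sketched in a few lines of plain Python. The example below slides a small hand-written vertical-edge filter over a toy image; the function name `conv2d`, the image, and the filter values are assumptions made for illustration, and in a trained CNN the filter values would be learned rather than written by hand.

```python
def conv2d(image, kernel):
    """Valid (no padding) 2D cross-correlation, the operation most CNN layers apply."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh)
                for dj in range(kw)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Toy 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]

# Hand-written vertical-edge filter; a trained CNN would learn such values.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]

feature_map = conv2d(image, vertical_edge)
```

The resulting feature map is non-zero only in the column where the image changes from dark to bright, which is how a single filter comes to respond to one kind of feature.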

Recurrent Neural Networks (RNNs) excel at processing sequential data. By maintaining an internal memory, RNNs can retain information in a hidden state, helping them capture context and order in text or speech. This makes RNNs suited to language translation, speech recognition, and generating text that mimics human writing. RNNs face challenges with longer sequences due to issues like vanishing gradients, which have been mitigated by variants such as Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs).
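
As a rough illustration of that internal memory, the sketch below runs a scalar, Elman-style recurrent cell over a short sequence. The weights `w_x`, `w_h`, and `b` and the function names are assumptions chosen for the example; real RNNs use weight matrices learned during training.

```python
import math


def rnn_step(x, h_prev, w_x, w_h, b):
    """One step of a scalar recurrent cell: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)."""
    return math.tanh(w_x * x + w_h * h_prev + b)


def run_rnn(sequence, w_x=0.5, w_h=0.8, b=0.0):
    """Feed a sequence through the cell, carrying the hidden state forward."""
    h = 0.0  # initial hidden state
    states = []
    for x in sequence:
        h = rnn_step(x, h, w_x, w_h, b)
        states.append(h)
    return states


# The same input value (1.0) appears at step 0 and step 2 but yields different
# hidden states, because the state also depends on what came before.
states = run_rnn([1.0, 0.0, 1.0])
```

Because each step multiplies the previous state by `w_h` and squashes it through `tanh`, the influence of early inputs shrinks over long sequences, which is the intuition behind the vanishing gradient problem.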

Reinforcement Learning (RL) teaches machines how to make a sequence of decisions by interacting with an environment. The goal of an RL agent is to achieve maximum cumulative reward, making it useful in complex decision-making systems such as robotics for autonomous navigation, real-time strategy game AI, and resource management. The application of RL has been bolstered by deep learning, where agents learn from large quantities of data to refine their strategies.
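
Cumulative reward is commonly computed with a discount factor so that near-term rewards count more than distant ones. The sketch below shows that calculation together with a single tabular Q-learning update, one well-known RL rule picked here purely for illustration. The discount `gamma`, the step size `alpha`, and the reward values are assumptions, not something the text above specifies.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards where a reward t steps ahead is scaled by gamma**t."""
    total = 0.0
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


def q_update(q_value, reward, best_next_q, alpha=0.5, gamma=0.9):
    """Tabular Q-learning update for a single (state, action) pair."""
    target = reward + gamma * best_next_q
    return q_value + alpha * (target - q_value)


episode_return = discounted_return([1.0, 0.0, 2.0])
new_q = q_update(q_value=0.0, reward=1.0, best_next_q=2.0)
```

In deep RL, the table of Q-values is replaced by a neural network that estimates them, but the target being chased has the same shape.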

Effectively employing these advanced deep learning techniques often requires careful tuning and considerable computational resources. However, improvements in processor and GPU technologies continue to mitigate these challenges, making these powerful tools more accessible across various industries. Through innovative designs and the integration of multiple neural network models, emerging applications for deep learning technologies seem boundless, paving the way for new levels of efficiency and automation.

Implementing Deep Learning Models

Implementing deep learning models effectively involves selecting the right frameworks and libraries, such as TensorFlow and PyTorch. TensorFlow, developed by Google, is known for its flexible ecosystem of tools and libraries, supporting both research and production. It can scale from small setups to massive deployments within consistent APIs, making it popular for building and deploying machine learning applications.

PyTorch, developed by Facebook (now Meta), shines in academic and research settings due to its simplicity and dynamic computational graph. This allows for intuitive modifications to the graph at runtime, which is beneficial during the experimental stages of model development. PyTorch also provides extensive support for customization and deep learning experimentation.

The choice between these two depends on specific project needs. TensorFlow is generally favored for large-scale deployments and mobile applications, while PyTorch offers advantages in rapid prototyping and iteration.

Data preparation is a fundamental prelude to successful model development. This involves collecting large datasets, followed by cleaning, validating, and partitioning the data to ensure it is suitable for training robust models without biases or errors. Practices such as normalization, tokenization for text data, and encoding categorical variables play crucial roles in preparing the input data.
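
The preparation steps named above can be sketched with the standard library alone. The helper names, the 80/20 split, the whitespace tokenizer, and the sample values below are all assumptions made for illustration; production pipelines typically rely on dedicated libraries for each step.

```python
import random


def min_max_normalize(values):
    """Rescale numeric values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


def one_hot_encode(labels):
    """Map each category to a vector with a single 1."""
    categories = sorted(set(labels))
    index = {c: i for i, c in enumerate(categories)}
    return [
        [1 if index[label] == i else 0 for i in range(len(categories))]
        for label in labels
    ]


def train_validation_split(rows, validation_fraction=0.2, seed=0):
    """Shuffle rows reproducibly, then hold out a fraction for validation."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    cut = len(shuffled) - int(len(shuffled) * validation_fraction)
    return shuffled[:cut], shuffled[cut:]


ages = min_max_normalize([20, 30, 40])
colors = one_hot_encode(["red", "blue", "red"])
tokens = "Deep learning needs clean data".lower().split()  # naive whitespace tokenizer
train, validation = train_validation_split(list(range(10)))
```

Holding out the validation rows before any tuning begins keeps the later performance estimates honest.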

Model tuning involves adjusting hyperparameters to improve performance and avoid overfitting or underfitting. Techniques like grid search or random search are commonly applied to explore the best settings. Modern deep learning also relies on techniques like dropout and batch normalization to enhance model robustness and generalization on unseen data.
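
The two search strategies differ only in how candidate settings are chosen. In the sketch below, `validation_loss` is a hypothetical stand-in for training a model and measuring it on held-out data, and the hyperparameter names and ranges are assumptions picked for the example.

```python
import itertools
import random


def validation_loss(learning_rate, dropout_rate):
    """Hypothetical stand-in for 'train a model and return its validation loss'."""
    return (learning_rate - 0.01) ** 2 + (dropout_rate - 0.3) ** 2


def grid_search(grid):
    """Try every combination in the grid and keep the lowest-loss one."""
    names = list(grid)
    best_params, best_loss = None, float("inf")
    for combo in itertools.product(*(grid[name] for name in names)):
        params = dict(zip(names, combo))
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


def random_search(ranges, trials=20, seed=0):
    """Sample each hyperparameter uniformly from its range for a fixed number of trials."""
    rng = random.Random(seed)
    best_params, best_loss = None, float("inf")
    for _ in range(trials):
        params = {name: rng.uniform(lo, hi) for name, (lo, hi) in ranges.items()}
        loss = validation_loss(**params)
        if loss < best_loss:
            best_params, best_loss = params, loss
    return best_params, best_loss


grid_best, grid_loss = grid_search(
    {"learning_rate": [0.001, 0.01, 0.1], "dropout_rate": [0.1, 0.3, 0.5]}
)
random_best, random_loss = random_search(
    {"learning_rate": (0.001, 0.1), "dropout_rate": (0.0, 0.5)}
)
```

Grid search cost grows multiplicatively with each added hyperparameter, whereas random search keeps a fixed budget of trials, which is one reason it is often preferred when many settings are in play.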

Monitoring the model's performance through strategic placement of checkpoints and early stopping during training can save resources and prevent unnecessary computational expense. Dashboard tools like TensorBoard give developers a transparent view of training progress, helping to optimize various aspects of the training runs.
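
Early stopping is easy to express as a loop over per-epoch validation losses. The sketch below assumes a `patience` of two epochs and a made-up loss curve; the function name and return shape are illustrative, and the line marked as a checkpoint is where a real training script would save the model.

```python
def train_with_early_stopping(validation_losses, patience=2):
    """Stop once `patience` consecutive epochs pass without improvement.

    Returns (best_epoch, best_loss, epochs_run). `best_epoch` marks where a
    checkpoint would have been saved.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    epochs_run = 0

    for epoch, loss in enumerate(validation_losses):
        epochs_run = epoch + 1
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch  # checkpoint the model here
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # further epochs would only spend compute

    return best_epoch, best_loss, epochs_run


# Hypothetical loss curve: improves, then rises as the model starts to overfit.
losses = [0.90, 0.70, 0.60, 0.65, 0.70, 0.80, 0.95]
best_epoch, best_loss, epochs_run = train_with_early_stopping(losses)
```

With this curve, training halts after five of the seven epochs and the checkpoint from the third epoch (index 2) is the one to keep.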

With advancements in available tools and technologies, along with proper methodology for data preparation and parameter tuning, deep learning models pave the way toward realizing new dimensions in artificial intelligence applications.

Challenges and Solutions in Deep Learning

While deep learning has unlocked unprecedented advancements, working with these models brings notable challenges. Chief among them are overfitting, underfitting, and the reliance on vast volumes of training data. Addressing these challenges is paramount for optimizing deep learning models and ensuring they perform predictably in real-world situations.

Overfitting occurs when a model learns not just useful trends from the training data but also the noise or irrelevant fluctuations. Such models may show high accuracy on their training data but perform poorly on new, unseen data. To mitigate this, techniques like regularization are deployed, adding a penalty on the magnitude of parameters within the model and so limiting its complexity. Regularization techniques such as L1 and L2 constrain the model to discourage learning overly intricate patterns that do not generalize well.
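
The penalty terms are simple to write down. The sketch below adds an L1 or L2 penalty to a data-fit loss; the weights, the penalty strength of 0.1, and the function names are assumptions chosen for the example.

```python
def l1_penalty(weights, strength):
    """L1 regularization: strength times the sum of absolute weights."""
    return strength * sum(abs(w) for w in weights)


def l2_penalty(weights, strength):
    """L2 regularization: strength times the sum of squared weights."""
    return strength * sum(w * w for w in weights)


def regularized_loss(data_loss, weights, l1=0.0, l2=0.0):
    """Total loss = data-fit loss + penalties that grow with weight magnitude."""
    return data_loss + l1_penalty(weights, l1) + l2_penalty(weights, l2)


weights = [0.5, -2.0, 1.0]
loss_l1 = regularized_loss(1.0, weights, l1=0.1)
loss_l2 = regularized_loss(1.0, weights, l2=0.1)
```

Because the L2 term squares each weight, it punishes the single large weight (-2.0) far more than the small ones, while L1 charges every weight in proportion to its size and tends to push small weights to exactly zero.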

Another method to combat overfitting is dropout. Randomly selected neurons are ignored during training, preventing the network from becoming too dependent on any individual neuron and encouraging a more robust and distributed internal representation. Dropout forces the neural network to learn multiple independent representations of the same data points, enhancing its generalization abilities.
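
A minimal sketch of dropout on a list of activations follows. It uses the "inverted" variant, which rescales the surviving activations during training so that nothing needs to change at inference time; that variant, the 0.5 rate, and the fixed seed are assumptions of this example rather than details from the text above.

```python
import random


def dropout(activations, rate, rng, training=True):
    """Inverted dropout.

    During training, zero each activation with probability `rate` (must be
    below 1) and scale the survivors by 1 / (1 - rate). At inference time,
    pass the activations through unchanged.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


rng = random.Random(0)  # fixed seed so the sketch is reproducible
hidden = [1.0] * 1000

train_out = dropout(hidden, rate=0.5, rng=rng)
eval_out = dropout(hidden, rate=0.5, rng=rng, training=False)
```

Each training pass draws a fresh mask, so the network cannot count on any one neuron being present.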

Underfitting happens when a model is too simple to capture the underlying trend of the data, often due to an overly conservative architecture or insufficient training. This can be rectified by increasing model complexity or training for more epochs, giving the model more opportunity to learn deeper insights. Adjustments may also be needed in the learning rate to ensure the model does not miss important trends.

Regarding the need for large amounts of training data, solutions like data augmentation and transfer learning come to prominence. Data augmentation artificially expands the training set by creating modified versions of data points, such as rotating images or altering colors. This expands the dataset and imbues the model with robustness to variations in input data.
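
The following sketch applies three simple augmentations to a tiny grayscale image stored as nested lists. The function names, the brightness shift standing in for a color change, and the pixel values are assumptions made for illustration.

```python
def horizontal_flip(image):
    """Mirror each row left to right."""
    return [row[::-1] for row in image]


def rotate_90_clockwise(image):
    """Rotate a 2D grid of pixels 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def adjust_brightness(image, delta, max_value=255):
    """Shift pixel intensities, clamping to the valid range."""
    return [[min(max_value, max(0, p + delta)) for p in row] for row in image]


def augment(image):
    """Return the original image plus three modified copies."""
    return [
        image,
        horizontal_flip(image),
        rotate_90_clockwise(image),
        adjust_brightness(image, 40),
    ]


image = [
    [10, 20],
    [30, 250],
]
augmented = augment(image)
```

One labeled example becomes four, and each copy keeps the original label.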

Transfer learning repurposes a model developed for one task as the starting point for a model on a second task. It is valuable when there is a large dataset in one domain and a smaller one in another. By leveraging the existing weights and architecture as a base model, adjustments can be made based on the smaller dataset, saving computational resources and accelerating training.
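
One common way to apply this idea is to freeze the base model and train only a small new "head" on top of it. The toy sketch below imitates that pattern: `pretrained_features` is a hypothetical stand-in for a frozen base model, and the dataset, learning rate, and epoch count are assumptions picked so the example runs quickly.

```python
def pretrained_features(x):
    """Stand-in for a frozen base model: its 'weights' are fixed and never updated.

    Hypothetical: pretend an earlier task taught the model that x and x**2
    are useful features.
    """
    return [x, x * x]


def train_head(samples, epochs=1000, learning_rate=0.05):
    """Fit only a small linear head on top of the frozen features (squared-error loss)."""
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for x, target in samples:
            features = pretrained_features(x)
            prediction = sum(w * f for w, f in zip(weights, features)) + bias
            error = prediction - target
            # Gradient step on the head parameters only; the base stays untouched.
            weights = [w - learning_rate * error * f for w, f in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


# Small dataset for the new task: target = 3 * x**2 + 1.
small_dataset = [(x, 3 * x * x + 1) for x in (-1.0, -0.5, 0.0, 0.5, 1.0)]
head_weights, head_bias = train_head(small_dataset)
```

Only three numbers are learned from the five examples, because the reused features already do most of the work. Fine-tuning some or all of the base layers is an alternative when the new task differs more from the original one.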

By employing these strategies, deep learning practitioners can skillfully handle typical challenges, customizing their models to achieve efficiency and accuracy in various applications. Whether through regularization techniques, data augmentation, or leveraging learned features via transfer learning, deep learning offers various tools to address its inherent challenges, promoting more reliable and robust AI systems.

Future Trends and Applications

Emerging technologies in deep learning continue to push boundaries, and one of the most exciting developments is Generative Adversarial Networks (GANs). GANs are a class of AI algorithms used in unsupervised machine learning, implemented as a system of two neural networks, a generator and a discriminator, contesting with each other in a zero-sum game. This setup enables the generation of realistic synthetic data, which has applications across various fields.
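
The zero-sum game is usually written as a minimax objective. The notation below is not defined in the text above and is introduced here as an assumption: $G$ is the generator, $D$ is the discriminator, $p_{\text{data}}$ is the distribution of real data, and $p_z$ is the noise distribution fed to the generator.

$$
\min_G \max_D \; V(D, G) = \mathbb{E}_{x \sim p_{\text{data}}(x)}\big[\log D(x)\big] + \mathbb{E}_{z \sim p_z(z)}\big[\log\big(1 - D(G(z))\big)\big]
$$

The discriminator tries to make $D(x)$ large for real samples and $D(G(z))$ small for generated ones, while the generator tries to do the opposite by producing samples the discriminator accepts as real.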

In the healthcare sector, GANs show promise in creating synthetic medical images for training purposes. This helps overcome privacy issues and the rarity of certain medical conditions, which often limit the amount of training data available. By generating high-quality artificial data, researchers and medical professionals can simulate a broader range of scenarios for more effective diagnostic tool development and medical training.

The automotive industry is on the verge of transformation, with deep learning leading advancements in autonomous driving. Vehicles equipped with sensors and cameras generate vast quantities of data. Deep learning algorithms can process and analyze this data, improving decision-making for self-driving cars. Capabilities such as real-time object detection, motion prediction, and complex scene interpretation are being enhanced by deep learning models, paving the way for safer and more efficient automated transportation systems.

AI ethics emerges as a critical conversation, especially as technologies like deep learning become central to more human-centric applications. The development of ethical AI involves creating systems that make decisions in ways that are fair, transparent, and accountable. Guidelines and frameworks aimed at governing AI's use in sensitive areas are expected to evolve in tandem with technological advancements, ensuring beneficial implementations while minimizing societal risks.

Deep learning also opens potential new horizons in environmental conservation, with algorithms processing data from various sources to monitor deforestation, wildlife migration, and climate change more effectively. These tools can help predict future environmental shifts, providing critical data that can inform global strategies for sustainability.

As we look toward a future increasingly guided by artificial intelligence, the intersection of cutting-edge technology with ethical consideration will be paramount to responsibly harnessing deep learning's full potential. This marriage of progress and ethics promises immense enhancements across multiple sectors and helps ensure that the benefits of AI innovations are enjoyed broadly, contributing positively to society globally.

