Convolutional Neural Networks (CNNs) have become indispensable tools in computer vision, enabling advances in image and video recognition. Understanding the core components and mechanisms of CNNs reveals their impact across various applications, from medical imaging to autonomous vehicles.

Core Components of CNNs

Convolutional Neural Networks (CNNs) consist of several key layers:

- Convolutional layers: Apply filters to the input image to detect features. These filters slide across the image, computing a dot product between the filter and each pixel region of the input, resulting in feature maps.

- Pooling layers: Reduce the spatial dimensions of feature maps, making the data easier to process and less prone to overfitting. Max pooling selects the maximum value from each region, while average pooling computes the average value.

- Activation functions: Introduce nonlinearity into the network, enabling it to learn complex patterns. The Rectified Linear Unit (ReLU) is commonly used; it sets negative values to zero and keeps positive values unchanged.

- Fully connected layers: Operate like a traditional neural network, connecting every neuron to every neuron in the preceding layer. They perform the final mapping to output classes, translating extracted features into predictions.

These components work together to process and interpret visual data accurately, making CNNs powerful tools for image and video recognition tasks.
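The activation and pooling steps can be illustrated with a short NumPy sketch. It assumes a single-channel feature map and non-overlapping 2×2 pooling windows (stride 2); the helper names `relu` and `pool2d` are illustrative, not taken from any particular library.

```python
import numpy as np

def relu(x):
    # Negative values become zero; positive values pass through unchanged.
    return np.maximum(x, 0.0)

def pool2d(feature_map, size=2, mode="max"):
    """Non-overlapping pooling over size x size windows (stride == size)."""
    h, w = feature_map.shape
    h_out, w_out = h // size, w // size
    # Drop any rows/columns that do not fill a complete window.
    trimmed = feature_map[:h_out * size, :w_out * size]
    # Give each window its own pair of axes (1 and 3), then reduce over them.
    windows = trimmed.reshape(h_out, size, w_out, size)
    if mode == "max":
        return windows.max(axis=(1, 3))
    if mode == "average":
        return windows.mean(axis=(1, 3))
    raise ValueError(f"unknown mode: {mode}")

fm = np.array([[ 1, -3,  2,  0],
               [ 4,  6, -1,  1],
               [ 0, -2,  5,  7],
               [-1,  1,  8, -4]], dtype=float)
activated = relu(fm)
print(pool2d(activated, mode="max"))      # window maxima: 6, 2, 1, 8
print(pool2d(activated, mode="average"))  # window means: 2.75, 0.75, 0.25, 5.0
```

Each 2×2 window collapses to a single value, so the 4×4 map shrinks to 2×2.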

How Convolutional Layers Work

Convolutional layers are central to CNN functionality. They use filters (kernels) to detect patterns within input images. These filters are small matrices that capture specific features such as edges, textures, and shapes.

During convolution, filters slide across the input image, computing a dot product between the filter's weights and the underlying pixel values. This generates a feature map that emphasizes the detected features. The stride determines how far the filter moves at each step, which affects the spatial resolution of the feature map.
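A minimal sketch of a single-channel convolutional layer's forward pass, assuming no padding and a hand-picked vertical-edge filter (a real layer learns its weights during training). As in most deep learning libraries, the filter is not flipped, so strictly speaking this is cross-correlation. `conv2d` is a hypothetical helper name.

```python
import numpy as np

def conv2d(image, kernel, stride=1):
    """Valid (no-padding) 2D convolution of a single-channel image."""
    ih, iw = image.shape
    kh, kw = kernel.shape
    oh = (ih - kh) // stride + 1
    ow = (iw - kw) // stride + 1
    out = np.zeros((oh, ow))
    for i in range(oh):
        for j in range(ow):
            # The stride sets how far the window moves between outputs.
            r, c = i * stride, j * stride
            region = image[r:r + kh, c:c + kw]
            # Dot product between the filter weights and the pixel region.
            out[i, j] = np.sum(region * kernel)
    return out

# A 6x6 image whose right half is bright, and a vertical-edge filter.
image = np.array([[0, 0, 0, 9, 9, 9]] * 6, dtype=float)
kernel = np.array([[-1, 0, 1],
                   [-1, 0, 1],
                   [-1, 0, 1]], dtype=float)

print(conv2d(image, kernel, stride=1).shape)  # (4, 4); each row is 0, 27, 27, 0
print(conv2d(image, kernel, stride=2).shape)  # (2, 2)
```

The large values mark the columns where the window straddles the dark-to-bright edge; doubling the stride halves the resolution of the resulting feature map.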

Parameter sharing is a key concept in convolutional layers. Unlike fully connected layers, convolutional layers reuse the same set of filter weights while scanning different parts of the image. This reduces the number of parameters required, making the network more efficient and less prone to overfitting.
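To make the savings concrete, the sketch below counts parameters under assumed sizes: a 224×224 RGB input processed by 64 filters of size 3×3, compared with a fully connected layer that has only 64 output units. The function names are hypothetical, and both counts include one bias per output.

```python
def conv_params(in_channels, out_channels, kernel_size):
    # One kernel_size x kernel_size x in_channels filter per output channel,
    # plus one bias each. The image size does not appear at all.
    return out_channels * (kernel_size * kernel_size * in_channels + 1)

def dense_params(in_features, out_features):
    # Every input connects to every output, plus one bias per output.
    return out_features * (in_features + 1)

print(conv_params(3, 64, 3))            # 1792
print(dense_params(224 * 224 * 3, 64))  # 9633856
```

The convolutional layer needs the same 1,792 parameters whatever the input resolution, because its 64 filters are reused at every position.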

Padding addresses the border problem, where filters cannot adequately cover the edges of an image. Adding extra pixels around the image border (typically zeros) can preserve the output size. Common padding strategies include:

- Valid Padding: No padding applied

- Same Padding: Padding ensures the output feature map has the same dimensions as the input (with a stride of 1)

- Full Padding: Padding extends the input dimensions so that the output is larger than the input

By adjusting stride and padding, CNNs can be adapted for various tasks and can handle images of different sizes and resolutions.
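For an input of size n, kernel size k, padding p on each side, and stride s, the spatial output size along one dimension is floor((n + 2p - k) / s) + 1. The sketch below applies this formula to the three strategies, assuming an odd kernel size so that "same" padding splits evenly between both sides; `output_size` is an illustrative name.

```python
def output_size(n, k, stride=1, padding="valid"):
    """Convolution output size along one dimension: floor((n + 2p - k) / s) + 1."""
    if padding == "valid":
        p = 0                # no padding: the output shrinks by k - 1
    elif padding == "same":
        p = (k - 1) // 2     # keeps the size when stride == 1 and k is odd
    elif padding == "full":
        p = k - 1            # every partial overlap of kernel and input counts
    else:
        raise ValueError(f"unknown padding: {padding}")
    return (n + 2 * p - k) // stride + 1

for mode in ("valid", "same", "full"):
    print(mode, output_size(32, 3, padding=mode))  # 30, 32, 34
```

With a stride of 2 and "same" padding, the same 32-pixel input yields 16 outputs, which is how strided layers downsample.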

Training and Optimization in CNNs

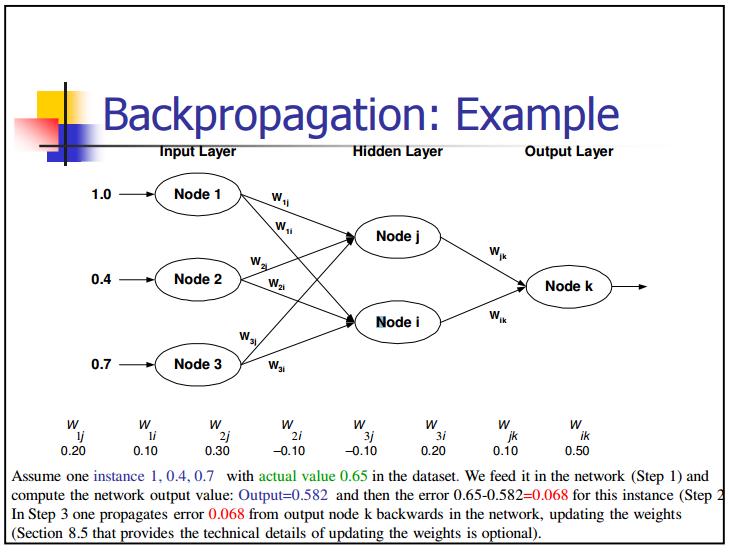

Training CNNs involves optimizing network parameters using backpropagation and gradient descent. Backpropagation computes gradients by propagating the error backward through the network, while gradient descent adjusts the parameters based on these gradients to minimize the loss function.
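The interplay between the two can be sketched on a tiny two-layer network in NumPy. Everything here is an assumption for illustration: the toy regression target, the layer sizes, the mean squared error loss, and the learning rate. Backpropagation is written out by hand as the chain rule, and each iteration applies one plain gradient descent step.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy regression data (assumed): the target is the sum of squared inputs.
X = rng.normal(size=(64, 2))
y = (X ** 2).sum(axis=1, keepdims=True)

# Two-layer network: linear -> ReLU -> linear.
W1 = rng.normal(scale=0.5, size=(2, 8)); b1 = np.zeros((1, 8))
W2 = rng.normal(scale=0.5, size=(8, 1)); b2 = np.zeros((1, 1))
lr = 0.05
losses = []

for step in range(200):
    # Forward pass.
    z1 = X @ W1 + b1
    h = np.maximum(z1, 0.0)
    y_hat = h @ W2 + b2
    loss = np.mean((y_hat - y) ** 2)
    losses.append(loss)

    # Backward pass: chain rule from the loss back to the first layer.
    n = X.shape[0]
    d_yhat = 2.0 * (y_hat - y) / n      # dL/dy_hat
    dW2 = h.T @ d_yhat
    db2 = d_yhat.sum(axis=0, keepdims=True)
    d_h = d_yhat @ W2.T
    d_z1 = d_h * (z1 > 0)               # ReLU passes gradient only where z1 > 0
    dW1 = X.T @ d_z1
    db1 = d_z1.sum(axis=0, keepdims=True)

    # Gradient descent: step each parameter against its gradient.
    W1 -= lr * dW1; b1 -= lr * db1
    W2 -= lr * dW2; b2 -= lr * db2

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Deep learning frameworks compute the same gradients automatically, but the order of operations (forward pass, backward pass, parameter update) is the same.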

The Rectified Linear Unit (ReLU) activation function introduces nonlinearity, enabling the network to model complex patterns by mapping negative values to zero and leaving positive values unchanged.

To combat overfitting, several regularization techniques are commonly used:

| Technique | Description |
|---|---|
| Dropout | Randomly sets a fraction of neurons to zero during training, preventing over-reliance on particular neurons. |
| Batch Normalization | Normalizes the inputs of each layer to a consistent mean and variance, stabilizing training and allowing higher learning rates. |
| L2 Regularization | Adds a penalty term to the loss function that encourages smaller weights without driving them to zero. |
| Early Stopping | Monitors performance on a validation set and stops training when performance plateaus. |
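Each technique in the table can be sketched in a few lines of NumPy. These are simplified versions written for illustration: dropout uses the common "inverted" scaling, batch normalization shows training mode only (no running statistics for inference), and all helper names are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(0)

def dropout(x, rate, training=True):
    """Inverted dropout: zero a fraction `rate` of units and rescale the rest
    so the expected activation is unchanged; a no-op at inference time."""
    if not training or rate == 0.0:
        return x
    keep = rng.random(x.shape) >= rate
    return x * keep / (1.0 - rate)

def batch_norm(x, gamma, beta, eps=1e-5):
    """Training-mode batch normalization over the batch axis (axis 0)."""
    mean = x.mean(axis=0)
    var = x.var(axis=0)
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta          # learnable scale and shift

def l2_penalty(weights, lam):
    """Term added to the loss: lam times the sum of squared weights."""
    return lam * sum(np.sum(w ** 2) for w in weights)

def early_stopping_epoch(val_losses, patience=3):
    """Index of the epoch at which training stops: the epoch completing
    `patience` consecutive epochs without a new best validation loss
    (or the last epoch if that never happens)."""
    best = float("inf")
    waited = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best, waited = loss, 0
        else:
            waited += 1
            if waited >= patience:
                return epoch
    return len(val_losses) - 1

print(early_stopping_epoch([1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.6]))  # 5
```

In the usage line, the best validation loss (0.7) occurs at epoch 2, and three non-improving epochs later training stops at epoch 5, before the later 0.6 is ever seen. This illustrates why the patience value is itself a hyperparameter.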

These techniques, combined with careful hyperparameter tuning, allow CNNs to learn from data and generalize effectively.

Applications of CNNs

CNNs have demonstrated exceptional performance across many real-world applications:

- Image Classification: CNNs categorize images with high accuracy, powering auto-tagging features in platforms such as Google Photos.

- Object Detection: Used in self-driving cars to recognize and track pedestrians, vehicles, and obstacles in real time.

- Facial Recognition: Employed in smartphone unlocking mechanisms and social media photo-tagging suggestions.

- Medical Image Analysis: Assists in diagnosing conditions by analyzing X-rays, MRIs, and CT scans, detecting tumors and identifying abnormalities.[1]

- Retail: Facilitates automated shelf scanning and inventory management using drones equipped with CNNs.

- Agriculture: Contributes to crop monitoring and yield prediction by analyzing drone-captured images of fields.

- Aerospace: Assists in identifying structural damage on aircraft exteriors during inspections.

- Environmental Monitoring: Analyzes satellite imagery to track deforestation, monitor urbanization, and assess natural disasters.

As AI and deep learning continue to advance, the scope of CNNs is expected to broaden, driving innovation across many domains. Recent studies have shown that CNNs can achieve human-level performance on certain image classification tasks, with error rates as low as 3.5%.[2]

Challenges and Limitations of CNNs

Despite their impressive capabilities, Convolutional Neural Networks (CNNs) face several challenges:

- Computational complexity: Modern CNNs can have millions of parameters, and training them requires significant computational power, typically on specialized hardware such as GPUs or TPUs.

- Data requirements: CNNs need vast amounts of labeled training data to avoid overfitting and achieve high performance.

- Interpretability: Often described as "black-box" models, CNNs offer limited insight into their decision-making process.

- Scarcity of labeled data: In specialized domains, obtaining large labeled datasets can be resource-intensive and time-consuming.

The need for large labeled datasets is particularly challenging in specialized domains such as medical imaging, where labeling often requires expert knowledge and extensive manual effort. Techniques such as transfer learning and semi-supervised learning are used to address this, but they introduce their own challenges.

"While CNNs have transformed many industries with their ability to analyze visual data, they come with inherent challenges that require careful consideration and innovative solutions."

Addressing these limitations involves balancing computational demands, managing extensive data requirements, tackling overfitting, improving interpretability, and handling the complexities of assembling large labeled datasets.

Case Study: Enhancing Nasal Endoscopy with CNNs

Researchers from Ochsner Health have developed a CNN model to improve the precision and efficiency of nasal endoscopy diagnostics. This case study examines the methodology, results, and implications of applying CNNs in this medical domain.

Methodology:

- Model: YOLOv8 object detection model

- Task: Identify and segment critical anatomical landmarks (inferior turbinate and middle turbinate)

- Dataset: 2,111 nasal endoscopy images from 2014-2023

- Training: Transfer learning, hyperparameter fine-tuning, backpropagation, and stochastic gradient descent

Results:

| Metric | Value |
|---|---|
| Average Accuracy | 91.5% |
| Average Precision | 92.5% |
| Average Recall | 93.8% |
| Average F1-score | 93.1% |

These results were achieved at a 60% confidence threshold.
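F1 is the harmonic mean of precision and recall, F1 = 2PR / (P + R). Treating the reported averages as a single precision and recall value, the sketch below checks that they agree with the reported F1. It then shows how a confidence threshold filters detections before true and false positives are counted. The detection list and ground-truth count are invented purely for illustration and are not data from the study.

```python
def f1_score(precision, recall):
    # Harmonic mean: low whenever either precision or recall is low.
    return 2 * precision * recall / (precision + recall)

def precision_recall(tp, fp, fn):
    return tp / (tp + fp), tp / (tp + fn)

# Consistency check against the reported averages.
print(round(f1_score(0.925, 0.938), 3))  # 0.931

# Hypothetical detections: (confidence score, matches a true landmark?).
detections = [(0.95, True), (0.81, True), (0.64, False), (0.58, True), (0.30, False)]
num_ground_truth = 3   # hypothetical number of true landmarks
threshold = 0.60       # detections below this confidence are discarded

kept = [matched for score, matched in detections if score >= threshold]
tp = sum(kept)                 # kept detections that match a landmark
fp = len(kept) - tp            # kept detections that do not
fn = num_ground_truth - tp     # landmarks with no kept detection
p, r = precision_recall(tp, fp, fn)
print(tp, fp, fn)  # 2 1 1
```

Here the correct detection scored at 0.58 falls below the threshold and becomes a false negative, which illustrates how the choice of threshold trades recall against precision.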

Implications:

For clinicians and otolaryngologists, deploying CNNs in nasal endoscopy can improve diagnostic accuracy, reduce interpretation errors, and streamline the diagnostic process. For trainees and non-specialists, CNNs can help overcome the steep learning curve associated with understanding the complex anatomy of the nasal cavity.

The success of this case study suggests broader implications for the use of CNNs in other areas of medical imaging. As the models are refined and adapted for various imaging modalities, their potential to improve diagnostic precision, streamline workflows, and compensate for the scarcity of specialized medical expertise becomes more apparent.

As research continues to advance, CNNs will likely play an increasingly important role in improving diagnostic precision and streamlining workflows across many fields. The integration of CNNs into medical imaging represents a significant step toward more accurate, efficient, and accessible healthcare diagnostics.

This article was written by Writio.
